We Tested the Best Camera SDKs for Mobile AR Apps

Developing camera features from scratch might sound fun until you're stuck writing endless code patches and debugging on 200 Android and 15 Apple devices. Details like latency, rendering speed, and device and platform compatibility also matter. That's when a camera SDK becomes a lifesaver.

A good camera SDK is a toolbox that relieves developers of low-level camera maintenance. The best camera SDKs do that by default and focus on stable capture, real-time processing, performance, and seamless extra perks like AR and AI effects across platforms.

Camera SDKs for Android and iOS power more than video and photo editing apps. They can provide:

- real-time filters, trimming/merging, smart processing, and AR effects to social, video editing, and eLearning apps;

- machine vision, inspection, and automation to industrial software and robotics;

- face tracking and virtual try-on to beauty tech and eCommerce;

- motion detection, facial recognition, and streaming optimization to smart homes and IoT.

We’ve handpicked, tested, and compared camera SDKs across four use-case categories to see how they perform and what features they offer. For AR and video, Banuba Camera SDK serves as our reference point, showing what’s currently achievable in real-time processing and AI-powered AR effects.


TL;DR:

- We have compared camera SDKs based on ease of integration, feature sets, platform support, pricing models, and update quality;

- For scientific and industrial uses, OpenCV, Google ML Kit, and the Structure SDK fit best, covering low-level computer vision, ready-made on-device machine learning, and 3D depth capture, respectively;

- For AR and video editing, Banuba Camera SDK or Banuba Face AR SDK is the best fit thanks to simple integration, a rich feature set, and extensive documentation;

- For smart homes and IoT applications, Tuya or Agora are the best fit.

What Makes a Camera SDK the Best?

How do you know whether a particular camera SDK is the best? While preparing this camera SDK comparison, we evaluated each solution’s performance, usability, and other metrics against specific criteria. This approach reduces bias, and you can reuse it as an evaluation framework for your own analysis. Below are the core evaluation criteria we took into consideration:

Ease of Integration and Documentation

During our analysis, we examined how simple the integration process is and whether the Camera SDK provides detailed documentation.

This is why it matters when choosing the best Camera SDK for your business:

- Low-code or no-code Camera SDK solutions allow fast, technically painless integrations with minimal developer or tech team involvement. The faster the setup, the lower the development cost.

- Even if the integration process is simple, the documentation is the fundamental pillar that will guide you. Always check if the SDK provides structured documentation with step-by-step guides, sample code, and demo projects to simplify the installation. If the documentation is clear, teams can assign integration tasks to junior or mid-level developers instead of relying on senior engineers, which, again, reduces costs and speeds up delivery.

Platform coverage (Android, iOS, Web)

We tested whether the Camera SDKs are cross-platform and work on Android, iOS, and web.

You might only need a Camera SDK for iOS now, but what will you do when your app grows and launches on other operating systems? You may eventually have to switch to a cross-platform SDK provider. Hello, time-consuming migrations and extra costs! So, when choosing the best Camera SDK, pay attention to its platform compatibility. This will save you tons of maintenance and fuss in the future.

AR/AI Capabilities

The AI camera market is projected to grow at a CAGR of around 25% over the next five years, running hand in hand with augmented reality. The combination of these two technologies is a genuine business win-win. That’s why, when preparing this Camera SDK comparison article, we focused on the presence and quality of AR and AI features.

Why is this important? Because it’s not just another short-term trend riding the artificial intelligence hype. AI + AR Camera SDKs are about automation, personalization, security, and competitive advantage. They power use cases ranging from face recognition in surveillance systems to virtual try-on in eCommerce and smart clipping in video editing apps.

Licensing and Pricing Models

Budgets differ, I get it. At the MVP stage, it often makes sense to start with a less expensive or even free open-source SDK to validate your idea. But what are the odds it won't turn into a 'buy cheap, buy twice' scenario?

Once you begin to scale, those attractive initial choices can quickly lead to higher maintenance costs, unexpected downtime, or even having to switch to a new provider. One way to avoid this is to choose a transparent, flexible, yet scalable pricing and licensing model.

Some commercial SDKs can also limit your growth potential with pitfalls like mandatory long-term contracts and strict usage-based limitations.

That's why we considered the following aspects during our analysis:

- Transparent, predictable pricing;

- Scaling opportunities across platforms and user growth;

- Clear terms on data privacy;

- Flexible billing models suitable for startups and established enterprises alike.

Developer Support and Updates

Even the best Camera SDKs lose value without active maintenance. As we researched, we paid attention to software updates and tech support.

Frequent updates ensure compatibility with new OS updates and camera APIs. Accessible documentation, responsive technical support, and developer communities help businesses resolve issues faster. SDKs backed by an engaged support team save development time, keep projects future-proof, and give you a sense that they've got your back in critical situations.

Camera SDKs for General Mobile Development

Let’s start with the basics. By general mobile development, we mean apps that use the camera for standard imaging tasks: taking photos, recording videos, scanning barcodes, streaming media, and so on. These operations don’t require advanced features like AI and AR, just core camera functionality.

In this context, though, “general” doesn’t mean “basic”. It’s the stable, efficient, and flexible foundation that developers can build on if they later decide to add more complex features. In mobile development, most basic camera-based projects rely on native frameworks that handle core camera features.

They are not SDKs in the commercial sense, but they have the same purpose: to give developers access to video and photo pipelines and integrate simple camera functionality into their solutions.

These frameworks can be treated as lightweight mobile camera SDKs without anything extra. For iOS, it’s Apple’s AVFoundation; for Android, the CameraX or Camera2 APIs; and hybrid apps built with React Native or Flutter typically use camera plugins that wrap the native APIs behind a cross-platform layer.

Pros and Cons of Lightweight Mobile SDKs

Nothing is perfect, and these lightweight mobile camera SDKs for iOS and Android also have their dark sides. Let’s compare the pros and cons.

Benefits:

- Officially supported by OS vendors, which guarantees stability and compatibility;

- No licensing or third-party dependency issues;

- High performance with minimal latency and full control over device hardware;

- Works well as a foundation for integrating more advanced SDKs later.

Trade-offs:

- Steeper learning curve and more manual coding, testing, and debugging;

- No built-in AR, AI, beautification, or other similar features;

- Platform-specific implementation, which requires separate logic for Android and iOS unless you use a hybrid wrapper;

- More time- and money-consuming during initial development.

Common Mobile Use Cases

General mobile camera use cases are diverse. Almost every app that needs to capture, record, or process images or videos falls into this category. The most common mobile use cases include:

- User photo capture;

- Receipt and invoice capture;

- Proof of delivery/work photo;

- Image upload;

- Quick photo/video messaging;

- Before & after photo documentation;

- Simple document photos.

In other words, camera functionality has become a baseline feature for most mobile apps.

When you need stability and control, native frameworks are enough.

But when you want to go further and add real-time AR, AI, or beautification effects, third-party Camera SDKs come off the bench to unlock the extra functionality.

Camera SDKs for Industrial and Scientific Use

For industrial and scientific use, basic is not enough; it’s time for hardcore camera features to enable computer vision for measurement, monitoring, automation, detection, recognition, and tracking. For these applications, every frame matters, as they demand precise control, automation, real-time processing, scientific-level accuracy, and the full potential of the hardware.

The machine vision market is expected to grow at a CAGR of 13% until 2030, and mobile devices are a big segment with their evolving hardware combined with AI and AR camera features integrated via third-party SDKs.

We reviewed three camera SDKs suitable for these use cases, mainly data streaming, tracking, and robotics.

OpenCV Mobile SDK for Robotics and Computer Vision

OpenCV was launched in 2000, and it is now one of the most established and optimized computer vision SDKs and libraries with a focus on real-time applications. The solution is open source and includes 2500+ algorithms.

The library is cross-platform, with C++, Python, and Java interfaces. For mobile, an Android SDK is available, and iOS integration is via a dedicated iOS framework on GitHub.

Features

Its core features provide a foundation for the computer vision capabilities you might want to add to a mobile camera app for industrial and scientific use:

- Image filtering and camera calibration for perceiving accurate distances;

- Object detection and tracking (including trackers like CSRT), facial and gesture recognition;

- Stereo vision and camera pose estimation for calculating the 3D position of the mobile device or external hardware in the environment;

- The dnn (Deep Neural Networks) module to run pre-trained neural networks (TensorFlow, Caffe, ONNX) on a device for custom object classification, detection, and segmentation;

- Building blocks for custom AR solutions (marker detection and visual odometry).
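
The calibration bullet above is what turns pixels into real-world distances. OpenCV's calibration routines (such as `cv2.calibrateCamera`) estimate a camera matrix whose focal length `fx` is expressed in pixels; with that value, the pinhole camera model gives distance = fx × real width / width in pixels. The sketch below is plain Python rather than OpenCV code, and it assumes the object faces the camera roughly head-on and that lens distortion has already been corrected; the function name and example values are hypothetical.

```python
def distance_from_width(fx_px: float, real_width_m: float, width_px: float) -> float:
    """Estimate the distance to an object of known width with the pinhole camera model.

    fx_px: focal length in pixels, taken from a calibrated camera matrix.
    real_width_m: the object's physical width in meters.
    width_px: the object's measured width in the (undistorted) image, in pixels.
    """
    if width_px <= 0:
        raise ValueError("width_px must be positive")
    # Similar triangles: width_px / fx_px == real_width_m / distance
    return fx_px * real_width_m / width_px


# Hypothetical example: fx = 1000 px, a 0.2 m wide part spans 100 px in the frame
print(distance_from_width(1000.0, 0.2, 100.0))  # 2.0 (meters)
```

Accuracy depends almost entirely on the calibration: an error in `fx` or in the measured pixel width scales the distance estimate proportionally.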

Scientific and Industrial Use Cases

When skillfully implemented, the OpenCV SDK can be used for non-invasive biological monitoring and medical imaging analysis, for example, heart rate estimation from video.

Mobile devices with an integrated OpenCV SDK can be used for control and analysis in industrial automation. For example, automated product inspection and defect detection on a manufacturing line, or real-time object identification and navigation assistance.

Performance and Latency

Because the SDK’s core is written in optimized C++, it executes fast and keeps processing latency low. Still, to achieve high FPS in object tracking, developers must follow best practices, such as avoiding excessive memory reallocation and offloading input and output operations from the processing loop.
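
Both practices translate into a common producer/consumer pattern: allocate the working buffer once and reuse it for every frame, and hand slow I/O (saving frames, uploading results) to a background thread through a bounded queue, dropping frames rather than stalling when the writer falls behind. In OpenCV itself, you would typically reuse output `Mat` objects, which are only reallocated when their size or type changes. The Python sketch below shows the same idea with the standard library only; `FrameWriter`, `process_frames`, and the pixel-inversion step are hypothetical placeholders, not OpenCV APIs.

```python
import queue
import threading


class FrameWriter:
    """Runs slow output (disk or network writes) on a background thread."""

    def __init__(self, sink, max_pending: int = 8):
        self._sink = sink  # hypothetical callable that persists one frame
        self._queue = queue.Queue(maxsize=max_pending)  # bounded, so memory use stays fixed
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def _run(self):
        while True:
            frame = self._queue.get()
            if frame is None:  # sentinel sent by close()
                break
            self._sink(frame)

    def submit(self, frame: bytes) -> bool:
        """Queue a frame without blocking; return False (frame dropped) if the writer is behind."""
        try:
            self._queue.put_nowait(frame)
            return True
        except queue.Full:
            return False

    def close(self):
        """Flush pending frames and stop the background thread."""
        self._queue.put(None)
        self._thread.join()


def process_frames(frames, writer: FrameWriter, frame_size: int) -> int:
    """Process frames in one reused buffer and hand results to the writer; return frames dropped."""
    buffer = bytearray(frame_size)  # allocated once, reused for every frame
    view = memoryview(buffer)
    dropped = 0
    for frame in frames:
        view[:] = frame  # copy in place; raises ValueError if the frame size differs
        for i in range(frame_size):  # placeholder "processing": invert each pixel
            buffer[i] = 255 - buffer[i]
        if not writer.submit(bytes(buffer)):  # snapshot, because the buffer is reused
            dropped += 1
    return dropped


written = []
writer = FrameWriter(written.append)
dropped = process_frames([bytes([0, 1, 2, 3]), bytes([10, 20, 30, 40])], writer, frame_size=4)
writer.close()
print(dropped, written)
```

The bounded queue is the key design choice: `put_nowait` never blocks the processing loop, so a slow disk or network costs you dropped frames instead of a lower frame rate.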

Integration and Community

The initial setup of adding the library/framework to your native application is a task even a junior developer can cope with. However, the effective use of its deep feature set and performance optimization is definitely not a newbie job. It requires a solid understanding of C++ and computer vision concepts.

The OpenCV community is diverse and highly active: a dedicated forum, Slack channels, official books, and a library of tutorials. Besides, OpenCV University positions itself as a central resource for learning. The project releases updates frequently, from minor bug fixes to major releases with new features and modules.

So, integrating and setting up the OpenCV SDK won’t be an issue for an experienced dev team, while aspiring developers will need to step on a learning path using the available official resources.

Google ML Kit for AI-powered Mobile Data Tracking

Google ML Kit is a free machine learning Camera SDK for iOS and Android. It brings Google’s machine learning expertise to mobile apps, and it delivers. Unlike the foundational nature of OpenCV, ML Kit is a higher-level abstraction layer that gives developers out-of-the-box APIs for advanced AI tasks.

This Camera SDK combines Google’s computer vision research and mobile-optimized models and runs on devices, meaning that the AI works its magic locally on smartphones or tablets. This ensures low latency and data privacy.

Features

Google ML Kit provides models and APIs that can cover and help automate the majority of data tracking tasks:

- Object detection and tracking with multiple entities in a live camera stream;

- Face and pose detection—mapping human body and face landmarks in real time;

- Barcode scanning and processing for most standard 1D and 2D formats;

- Image labeling to identify objects, activities, locations, etc., using the base model or a custom TensorFlow Lite model;

- Text recognition (OCR) and language identification for label reading, inventory numbers, etc.

As you can see, the SDK’s strength lies more in AI camera capabilities than in AR. However, when combined with ARCore or other AR Camera SDKs, it enables smart AR overlays powered by its own neural models.

Scientific and Industrial Use Cases

ML Kit is one of the best camera SDKs for industrial and scientific fields thanks to its accuracy, real-time identification and tracking, and offline mode. It can automate data logging via text recognition to reduce manual entry.
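
ML Kit's text recognizer returns the recognized text; turning it into a structured log entry is up to your app. The Python sketch below assumes a hypothetical inventory label format, `INV` followed by six digits, and fixes two common OCR confusions (the letters O and I read in place of 0 and 1) inside the digit field only. The pattern and function name are illustrative, not part of ML Kit.

```python
import re

# Hypothetical label format: "INV" + optional dash or space + six digits, e.g. "INV-004217"
_INV_PATTERN = re.compile(r"\bINV[-\s]?([0-9OI]{6})\b", re.IGNORECASE)
# Common OCR confusions inside a digit-only field: letter O -> 0, letter I -> 1
_OCR_DIGIT_FIXES = str.maketrans("OI", "01")


def extract_inventory_numbers(ocr_text: str) -> list[str]:
    """Pull normalized inventory numbers out of raw OCR text."""
    numbers = []
    for match in _INV_PATTERN.finditer(ocr_text):
        digits = match.group(1).upper().translate(_OCR_DIGIT_FIXES)
        numbers.append(f"INV-{digits}")
    return numbers


print(extract_inventory_numbers("Shelf 3\nINV-0O4217 qty 12\ninv 123456"))
# ['INV-004217', 'INV-123456']
```

Restricting the corrections to a field that is known to be numeric keeps them safe: applying the same substitutions to free text would corrupt real words.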

Other use cases include production monitoring, packaging inspection, and defect recognition. In medical establishments, it can count samples, read labels, estimate body posture, etc.

Performance and Latency

On-device AI calculations eliminate network latency, which leads to fast, real-time tracking and image processing with low overall latency. Google’s models are optimized for mobile hardware and can use hardware acceleration where the device supports it.

During image labeling with custom TensorFlow Lite models, performance depends on the model’s size and complexity.

Integration and Community

Google did its best to offer simplicity and maximum support. Their solution is both developer-friendly and enterprise-ready.

For integration, developers merely import the necessary libraries (via Gradle for Android or CocoaPods for iOS) and call the high-level APIs. Junior and mid-level developers can successfully implement core tracking features with ease—Google maintains detailed step-by-step guides and sample projects for every feature in the ML Kit documentation.

Models can be tested and debugged with Firebase ML Model Tester or Android Studio’s built-in ML tools. Updates arrive several times a year, bringing improved accuracy, model compression, and new features such as Generative AI extensions.

If you ever need help, a strong developer community is waiting for you on Stack Overflow, GitHub, Reddit, and Medium. Besides, you can always contact Google’s support.

Structure SDK for 3D Data Streaming and Spatial Tracking

The Structure SDK can turn any Apple or Android device with LiDAR into a high-precision 3D scanner, reconstructor, and spatial-mapping instrument with real-time processing. If a mobile device doesn’t have a depth sensor, the company offers its own hardware, the Structure Sensor.

The SDK is commercial, but the pricing is not publicly listed. Developers can obtain an evaluation license to prototype and run internal tests. For product deployment, you have to contact sales.

Features

The core value that distinguishes the Structure SDK from other providers is its ability to handle, process, and synchronize multiple high-bandwidth data streams simultaneously.

- Real-time 3D scanning to capture geometry, color, and texture;

- Depth sensing and meshing to reconstruct accurate 3D meshes from LiDAR or Structure Sensor data;

- Spatial tracking with Visual Inertial Odometry and Simultaneous Localization and Mapping enables calculating 3D position, size, and orientation, and building a map of the surrounding area;

- Integration with ARKit for seamless AR overlays and visualization of scanned models.

Scientific and Industrial Use Cases

When in need of precise 3D geometry and volumetric measurements in scientific or industrial projects, the Structure SDK can be your top choice.

In several case studies, the SDK was combined with the company’s hardware to perform accurate, non-invasive 3D scans of human limbs for producing prosthetics, and to create a reliable surface model of a patient’s back for scoliosis treatment.

It can also be used as a front-end sensor solution for mobile robotics and autonomous guided vehicles, providing 3D mapping for complex navigation and obstacle avoidance. In industrial inspections, engineers can scan manufactured parts and infrastructure to detect deviations and compare them with CAD models.
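
At its simplest, the deviation check described above boils down to comparing measured points with their nominal CAD counterparts and flagging anything outside tolerance. The sketch below assumes the scan has already been registered to the CAD model (for example with ICP) and that points are paired one-to-one; production inspection tools usually measure point-to-surface distance instead. Names, coordinates, and tolerances are hypothetical.

```python
import math

Point = tuple[float, float, float]


def out_of_tolerance(measured: list[Point], nominal: list[Point], tolerance_mm: float) -> list[tuple[int, float]]:
    """Return (index, deviation) for each measured point farther than tolerance_mm from its nominal point.

    Assumes the scan is already registered to the CAD model and points are paired one-to-one.
    """
    if len(measured) != len(nominal):
        raise ValueError("measured and nominal points must be paired")
    flagged = []
    for i, (p, q) in enumerate(zip(measured, nominal)):
        deviation = math.dist(p, q)  # Euclidean distance, in the same units as the inputs
        if deviation > tolerance_mm:
            flagged.append((i, deviation))
    return flagged


# Hypothetical scan of three points on a part, coordinates in millimeters
scan = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.3), (20.0, 0.0, 1.5)]
cad = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (20.0, 0.0, 0.0)]
print(out_of_tolerance(scan, cad, tolerance_mm=0.5))  # [(2, 1.5)]
```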

Performance and Latency

When paired with LiDAR or the Structure Sensor, the SDK maintains stable performance with frame delivery typically matching the sensor’s native 30–60 FPS, depending on the device and scene complexity.

Its internal processing pipeline handles real-time meshing, depth fusion, and texture alignment on the GPU, minimizing CPU overhead and keeping the preview responsive even during complex scans.

Integration and Community

The SDK offers detailed documentation and a step-by-step integration tutorial, from setting up the project on Android or iOS to the final mesh render. The tutorial includes code examples and takes about two hours to complete.

Although we haven’t found a dedicated official forum or community, there are plenty of threads on Reddit. The technical support is quite responsive, and there are regular updates (although not as frequent as in mass-market SDKs), concentrating on compatibility with new Android and iOS versions and refining the core tracking and meshing algorithms.

Camera SDKs for AR and Video Experiences

Consumers today have AI features in their cameras by default. So in-app camera functionality must deliver immediate engagement, immersion, and a perfect aesthetic vibe and quality.

Since TikTok added its AutoCut feature, as well as impressive face filters and AR effects, users expect no less from other social media platforms or video editing applications. However, augmented reality in applications is no longer just about funny bunny ears. It’s about 94% higher conversion rates through virtual try-on, and 73% of users being happy once they interact with it.

We’ve reviewed two Camera SDKs that can turn any application into an engine of content creation and AR experience across industries.

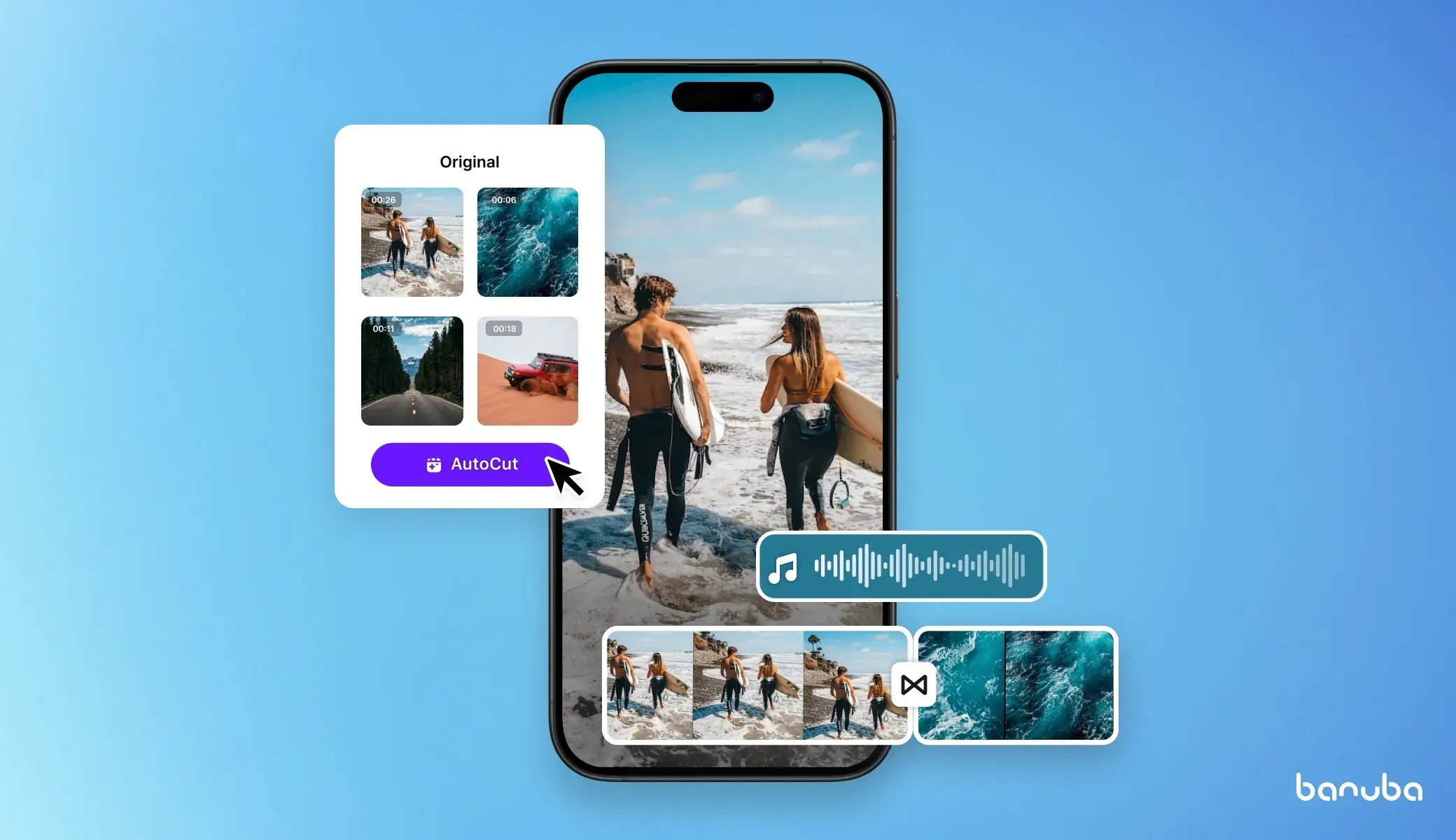

Banuba Camera SDK for Next-gen Video Editing

Imagine replacing your old 8-color Crayola box with a 152-count Ultimate Crayon collection. That’s what Banuba Camera SDK does with your device’s native camera. It powers Android and iOS apps with real-time video recording, editing, and AI-powered AR features. This AR Camera SDK can turn your app into TikTok, CapCut, or both, which makes it our top pick for video editing.

Face masks in Banuba Camera SDK


Features

Banuba Camera SDK enhances the user experience through a rich set of features:

- AI clipping or smart video editing for automatic trimming, merging, and soundtrack synchronization;

- Picture-in-picture mode;

- Next-gen AI background segmentation and replacement, no green screen required;

- Facial feature editing and AR face filters powered by AI;

- Beautification effects to address skin imperfections or apply virtual makeup;

- Video exporting in multiple resolutions and aspect ratios;

- Voice recording and text captions;

- 30+ GB of royalty-free music and an option to integrate a music provider;

- Video templates with premade transitions and effects.
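
Background replacement without a green screen typically works in two steps: a segmentation model produces a per-pixel mask (1 for the person, 0 for the background, soft values along the edges), and the app composites the frame over the new background with standard alpha blending, out = m × frame + (1 − m) × background. The Python sketch below shows only the compositing step on flat pixel lists; it is a generic illustration, not Banuba's implementation, and all names are hypothetical.

```python
Pixel = tuple[int, int, int]


def replace_background(frame: list[Pixel], background: list[Pixel], mask: list[float]) -> list[Pixel]:
    """Alpha-blend a camera frame over a new background using a per-pixel person mask.

    mask values: 1.0 = keep the camera pixel, 0.0 = show the background, in between = soft edge.
    """
    if not (len(frame) == len(background) == len(mask)):
        raise ValueError("frame, background, and mask must have the same number of pixels")
    out = []
    for fg, bg, m in zip(frame, background, mask):
        m = min(max(m, 0.0), 1.0)  # clamp in case the model output overshoots [0, 1]
        out.append(tuple(round(m * f + (1.0 - m) * b) for f, b in zip(fg, bg)))
    return out


# Two pixels: one fully inside the person mask, one on a soft edge
print(replace_background([(200, 100, 50), (200, 100, 50)], [(0, 0, 250), (0, 0, 250)], [1.0, 0.5]))
# [(200, 100, 50), (100, 50, 150)]
```

The soft mask values are what make hair and shoulders blend naturally; a hard 0/1 mask produces the jagged "cut-out" look of early virtual backgrounds.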

Use Cases

Banuba Camera SDK is a go-to toolkit for video editing applications. Apart from obvious integration opportunities, this solution can also enrich social media applications, social commerce platforms, and entertainment apps.

It can even be incorporated into video-calling platforms to enhance privacy through background replacement. For educational and corporate tools, it can be used for implementing video tutorials or presentations.

Banuba Face AR SDK for AR Experiences

Banuba Face AR SDK gives you an AR camera, face tracking, and virtual try-on all under one roof. Powered by patented technology, it reconstructs a 3D face mesh using 3,308 vertices, tracks multiple faces simultaneously, and works at distances of up to 7 meters.

It’s built to capture, analyze, and animate faces under almost any conditions: extreme angles, low-light environments, 70% facial occlusion, 360-degree camera rotation, you name it.

Features

Banuba Face AR SDK gives developers an entire creative toolkit for face-based AR:

- Snapchat-like filters, AR lenses, and real-time facial animation;

- Face morphing and reshaping for interactive and stylistic effects;

- Triggers, game filters, and gesture-based interactions (e.g., smile to start an effect);

- Virtual background replacement for live streaming or video calls;

- Avatar creation and 3D character animation, mapped to the user’s expressions in real time;

- LUTs (color filters) for cinematic post-processing and video consistency;

- Beautification tools featuring skin smoothing, lighting correction, and facial contouring;

- Makeup simulation with precise color blending, shine, and texture reflection;

- Hair color try-on with adjustable tones and natural shading;

- DIY effect creation using Banuba Studio, plus 1,000+ ready-to-use AR effects and custom templates in the Asset Store.
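
LUTs are popular for color filters because the expensive part (computing the color curve) happens once, after which each pixel is just a table lookup. Cinematic LUTs are usually 3D (for example, `.cube` files that map RGB triplets jointly); the Python sketch below uses the simpler 1D per-channel variant to show the principle. The gamma-style curve, the "warm" preset, and all names are hypothetical.

```python
def build_curve_lut(gamma: float) -> list[int]:
    """Precompute a 256-entry table for a gamma-style tone curve (gamma < 1 brightens midtones)."""
    return [round(255 * (i / 255) ** gamma) for i in range(256)]


def apply_lut(pixels, lut_r, lut_g, lut_b):
    """Map every (r, g, b) pixel through per-channel lookup tables."""
    return [(lut_r[r], lut_g[g], lut_b[b]) for r, g, b in pixels]


# Hypothetical "warm" filter: lift reds, keep greens, deepen blues
warm_r = build_curve_lut(0.8)
neutral = build_curve_lut(1.0)
deep_b = build_curve_lut(1.2)
print(apply_lut([(0, 0, 0), (128, 128, 128), (255, 255, 255)], warm_r, neutral, deep_b))
```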

Use Cases

Banuba Face AR SDK’s use cases are limitless. It can be applied in any field where facial AR filters bring value. And it’s far beyond fun; it engages users through expression, personalization, and a little bit of play.

In beauty-tech and retail, it enables real-time virtual try-on for makeup, glasses, lenses, jewelry, hair colors, letting customers experiment before they buy. According to our internal benchmarks, it can increase user engagement by 200% and boost conversion by 300%.

For video communication and streaming apps, it adds an extra layer of fun and identity through avatars, masks, and 3D facial animation. Beautification filters and background replacement also help users stay ready for unexpected meetings.

And in marketing and brand activations, it powers interactive campaigns, mini-games, and branded effects that boost engagement and audience reach. In social media and UGC apps, it helps users create shareable content and sparks creativity.

Performance and Latency

Since both SDKs are in the same ecosystem, it makes sense to describe their performance, latency, and integration under a single section.

Both the Banuba AR Camera SDK and the Face AR SDK process data on the device. The result? Minimal latency and smooth rendering even on mid-tier smartphones. According to Banuba’s internal tests, the SDKs run on 90% of Apple and Android devices.

Performance benchmarks show stable 30+ FPS under typical mobile load, with dynamic CPU/GPU allocation balancing performance and power consumption. Banuba’s video and effect pipelines are tuned for high throughput, allowing simultaneous face tracking, filter rendering, and background segmentation without dropped frames or visual artifacts.
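
A quick way to sanity-check a "30+ FPS" target in your own app is a frame budget: at 30 FPS every frame must be fully processed in 1000 / 30 ≈ 33.3 ms, and at 60 FPS in about 16.7 ms. The Python sketch below sums hypothetical per-stage timings against that budget. Summing assumes the stages run one after another; pipelines that overlap CPU and GPU work can fit more into the same interval, so treat this as a conservative check rather than a benchmark.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


def fits_budget(stage_times_ms: dict[str, float], fps: float) -> bool:
    """True if the stages, run back to back, finish within one frame interval."""
    return sum(stage_times_ms.values()) <= frame_budget_ms(fps)


# Hypothetical timings measured on a mid-tier phone
stages = {"face_tracking": 8.0, "filter_rendering": 6.0, "segmentation": 12.0}
for fps in (30, 60):
    print(fps, "FPS budget:", round(frame_budget_ms(fps), 1), "ms, fits:", fits_budget(stages, fps))
```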

Community and Support

Banuba’s developer ecosystem is diverse, from a dedicated community portal to Medium discussions. Tech support is also available if any issues or questions arise. Regular updates expand SDK functionality, adding new modules, optimizing neural networks, and improving effect rendering performance.

A 14-day free trial license is available for testing, with flexible commercial licensing options for both SMEs and enterprises. As a bonus, if you decide to scale your business, both the Banuba AR Camera SDK and the Face AR SDK are cross-platform (Flutter and React Native).

Integration

Banuba made its integration almost impossible to mess up. Both SDKs are modular, well-documented, and include demo apps and sample code on GitHub.

Step-by-step tutorials are available in the official documentation.

The integration process begins with obtaining a token from the team. Both SDKs can be added in no time through Gradle for Android or CocoaPods for iOS. Setup follows the same logic across platforms: import the SDK, initialize with a client token, and start the camera.

Banuba Camera SDK for Android Integration

- Add the Banuba repository and dependencies in build.gradle.

- Initialize the SDK with your client token in the Application class.

- Create or reuse a camera fragment to start recording and apply effects.

You can review the relevant repositories for Android integration on GitHub and follow the detailed documentation on the official portal.

Banuba Camera SDK for iOS Integration

- Add the SDK via CocoaPods or Swift Package Manager.

- Initialize Banuba in AppDelegate with your client token.

- Use the provided CameraViewController to start capturing video or apply filters.

The official GitHub account provides Banuba’s Camera SDK code samples, and a step-by-step integration guide is described in the documentation.

Camera SDKs for Smart Home and IoT

Camera SDKs for smart home and IoT ecosystems must ensure real-time monitoring, motion detection, and device compatibility—from video doorbells to surveillance systems. Low latency and reliable connectivity should be key priorities.

We reviewed two Camera SDKs that can power such experiences.

Tuya Smart Camera SDK

Tuya Smart Camera SDK is built for developers creating surveillance, motion detection, and connected-camera features for smart home applications. It supports multi-lens cameras, dash cameras, doorbells, PTZ and cube cameras, and 4G cameras. Basically, it provides APIs for camera streaming, two-way audio, and event triggers.

Source: developer.tuya.com


Features

- Live video streaming and cloud recording with support for multiple video formats;

- Two-way audio and video between the camera and the mobile app, with microphone and speaker control, for interactive devices like doorbells or intercoms;

- Motion detection and alerts, allowing developers to trigger in-app notifications or actions when movement occurs;

- PTZ control (pan-tilt-zoom) for cameras supporting mechanical movement;

- Smart-home automation integration, linking camera events (e.g., motion detection) to other Tuya-powered devices such as lights, alarms, and smart locks;

- Device control for managing the camera’s lens and configuring the detection area, image settings, and night vision.

The SDK is modular, meaning developers can easily embed or exclude individual features, speeding up app development and avoiding unnecessary overhead.
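
With Tuya, motion detection and alerts come as part of the SDK, so your app typically reacts to events rather than analyzing frames itself. To illustrate what such a detector does under the hood, here is a minimal frame-differencing sketch in Python: a pixel counts as changed when its brightness shifts by more than a threshold, and motion is reported when enough of the frame changes. This is a generic technique, not Tuya's algorithm, and both thresholds are hypothetical; real detectors usually also blur frames and require motion to persist across several frames before alerting.

```python
def motion_detected(prev: list[int], curr: list[int], pixel_threshold: int = 25, area_ratio: float = 0.01) -> bool:
    """Frame differencing on two grayscale frames given as flat lists of 0-255 values.

    A pixel counts as changed if its brightness moved by more than pixel_threshold;
    motion is reported when changed pixels exceed area_ratio of the frame.
    """
    if len(prev) != len(curr) or not curr:
        raise ValueError("frames must be non-empty and the same size")
    changed = sum(1 for a, b in zip(prev, curr) if abs(a - b) > pixel_threshold)
    return changed / len(curr) > area_ratio


still = [10] * 100
someone_walks_in = [10] * 95 + [200] * 5  # 5% of pixels change sharply
sensor_noise = [v + 10 for v in still]    # small uniform flicker stays under the threshold
print(motion_detected(still, someone_walks_in), motion_detected(still, sensor_noise))  # True False
```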

Smart Home and IoT Use Cases

The Tuya ecosystem was built to serve the smart homes and surveillance industries. You can integrate it into smart doorbells that trigger when motion or proximity is detected or into surveillance systems to display multiple camera feeds. It’s also a win-win scenario for pet or baby monitors—live video for control and observation, and two-way communication.

Performance and Latency

Tuya’s SDK delivers high-throughput streaming with latency typically below one second in modern network conditions. This is achieved through edge optimization and Tuya Cloud routing, which balance data between device, app, and cloud services for consistent real-time performance.

Community and Integration

Integration involves registering devices in Tuya’s IoT console, initializing SDK credentials, and adding modules in Android or iOS projects. The dedicated Tuya Developer Portal provides documentation, sample projects, and community forums.

The SDK gets frequent updates, primarily focused on new device protocols and hardware. Tuya offers a free development tier, but commercial use requires a paid edition or per-device licenses. According to official data, the commercial edition costs $5,000 per year.

Agora IoT SDK

Agora IoT SDK extends Agora’s real-time communication infrastructure to IoT devices — powering low-latency video and audio streaming for cameras, doorbells, and other smart systems. It focuses on edge-to-cloud connectivity and real-time streaming performance, making it ideal for interactive IoT systems that need fast event response.

Source: agora.io


Features

- Ultra-low latency (< 300 ms) live video and audio streaming between IoT cameras, apps, and cloud systems;

- Cross-platform SDKs (Android, iOS, Web, and embedded C/C++) for firmware integration;

- Built-in device management and support for common camera protocols like RTSP and RTMP;

- Peer-to-peer and cloud relay modes;

- Multi-party and multi-device live voice and video streaming, allowing developers to build complex home or industrial IoT ecosystems.

Smart Home and IoT Use Cases

The Agora IoT SDK is perfect for systems that require instant visual feedback and control. Smart doorbells and intercoms? Multi-camera monitoring systems? Industrial IoT? Transportation networks? Yes, please. It’s there when latency, reaction, action, and synchronization are critical.

Performance and Latency

The Agora IoT SDK’s footprint is less than 400 KB after integration, so system resource usage is minimal. It supports transfer speeds of up to 50 Mbps per user and recovers seamlessly from up to 50% packet loss. The SDK also boasts low memory usage: less than 2 MB while simultaneously sending and receiving 320×240 H.264 video.
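
To put the 2 MB figure in perspective, a raw 320×240 frame in the common 8-bit YUV 4:2:0 layout takes width × height × 1.5 bytes: a full-resolution luma plane plus two quarter-resolution chroma planes. The arithmetic below assumes that layout and reads "2 MB" as 2 MiB; under those assumptions, the SDK's whole working set is about the size of 18 uncompressed frames.

```python
def yuv420_frame_bytes(width: int, height: int) -> int:
    """Size of one 8-bit YUV 4:2:0 frame: full-res Y plane plus quarter-res U and V planes."""
    luma = width * height
    chroma = 2 * (width // 2) * (height // 2)
    return luma + chroma


frame = yuv420_frame_bytes(320, 240)
budget = 2 * 1024 * 1024  # "2 MB" read as 2 MiB (assumption)
print(frame, "bytes per frame;", budget // frame, "frames fit in the budget")
# 115200 bytes per frame; 18 frames fit in the budget
```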

Community and Integration

The Agora IoT SDK offers comprehensive documentation, including step-by-step instructions, FAQs, and a dedicated developer community on Discord. The integration itself is lightweight and easy: import the SDK, configure the channel parameters, and start the stream.

Regular updates mainly improve codec support and security features. There is a free tier with limited features, while the standard plan starts at $1,200 per month. Custom enterprise pricing is available upon request.

Why Banuba SDKs Lead in AR and Video

Banuba’s Camera SDK and Face AR SDK lead the AR and video markets thanks to a powerful technological foundation. It’s a diverse ecosystem that offers Face Tracking, AR Camera, and Virtual Try-on SDKs in a single solution. Compared to similar software on the market, Banuba offers the following advantages:

- Simple integration that can take less than 8 minutes;

- Small size on mobile, adding only 25MB on average to the download;

- Patented technology skips the identification of 2D points. Instead, we create a full 3D head model directly, achieving higher precision and stability;

- The hybrid AI architecture combines CNNs, GANs, and custom neural layers to cut execution time and ensure accurate performance on 90% of smartphones;

- All complex Computer Vision models (e.g., tracking, segmentation) run locally on the user's device. This ensures ultra-low latency (approx. 10ms) and maintains high frame rates (up to 60 FPS), ensuring reliable, high-quality AR experiences;

- Zero personal data is ever transmitted to the Internet. Nothing is gathered, and all the processing is done on users’ devices. This simplifies compliance with global regulations (GDPR, CCPA) and builds user trust;

- Banuba has a cross-platform core, meaning you can start small on one platform and expand without hassle, offering the same user experience in all ecosystems. Banuba’s SDKs support Android, iOS, Flutter, React Native, Mac, Windows, Unity, and Web.

Business metrics speak louder than promises. Banuba’s SDKs bring qualitative and quantitative benefits to its customers. Integrating its solutions can save 50% of development time, resulting in lower expenses and a faster path to launch. Video editing apps that use its SDK have reached over 20M downloads, and user engagement with its AR features exceeds 15M per month.

In eCommerce, Banuba’s Virtual Try-on SDK can increase the average add-to-cart rate by 600%, and our internal benchmarks show that the return rate can decrease by 60%.

Choosing the Right Camera SDK

If we’ve overwhelmed you with information, here’s a brief checklist to help you choose the best Camera SDK for your needs. Consider the following:

- Platform compatibility. Is the SDK available for the platforms you target? Think strategically: plan for future growth and coverage of additional platforms.

- Performance. Check benchmarks and the documentation on CPU/GPU usage and offline modes. Make sure latency stays low.

- Features. Look beyond the basics. Even if you don’t need AR and AI today, plan ahead to avoid a messy migration or a switch of providers later.

- Integration and documentation. Before committing, study the available resources to estimate the engineering effort. The simpler the integration, the faster your app ships. Look for SDKs with step-by-step guides, sample projects, and active developer communities.

- Licensing. Prefer transparent pricing and flexible licensing. Check for hidden costs such as per-minute usage fees, per-device licensing, or revenue sharing.
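The checklist can be turned into a simple weighted scoring matrix for comparing shortlisted SDKs side by side. In this sketch, the criterion weights and the 1 to 5 scores are hypothetical placeholders (not results from our tests), and `sdk_a` and `sdk_b` stand in for whichever SDKs you are evaluating; adjust both to your project’s priorities.

```python
from typing import Dict, List, Tuple

# Hypothetical weights for the five checklist criteria; they should sum to 1.0.
WEIGHTS: Dict[str, float] = {
    "platform_compatibility": 0.25,
    "performance": 0.25,
    "features": 0.20,
    "integration_docs": 0.15,
    "licensing": 0.15,
}

# Placeholder 1-5 scores for two unnamed SDKs; these are not measured results.
CANDIDATES: Dict[str, Dict[str, float]] = {
    "sdk_a": {"platform_compatibility": 5, "performance": 4, "features": 5,
              "integration_docs": 4, "licensing": 3},
    "sdk_b": {"platform_compatibility": 3, "performance": 5, "features": 3,
              "integration_docs": 3, "licensing": 5},
}


def weighted_score(scores: Dict[str, float],
                   weights: Dict[str, float] = WEIGHTS) -> float:
    """Combine per-criterion scores into a single weighted score."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weights[c] * scores[c] for c in weights)


def rank(candidates: Dict[str, Dict[str, float]]) -> List[Tuple[str, float]]:
    """Return (name, score) pairs sorted from highest to lowest score."""
    ranked = [(name, weighted_score(s)) for name, s in candidates.items()]
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    for name, score in rank(CANDIDATES):
        print(f"{name}: {score:.2f}")
```

With these placeholder numbers, `sdk_a` scores 4.30 and `sdk_b` scores 3.80. A hard requirement, such as support for a platform you must ship on, is better applied as a pass/fail filter before scoring than as one more weighted criterion.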

When to Choose Banuba?

Choose Banuba SDKs when your app’s value depends on camera engagement and real-time visual experiences. If your roadmap includes any of the following, Banuba is your go-to solution:

- AR filters, lenses, avatars, or beautification features;

- Background removal and segmentation for video calls or editing;

- Virtual try-on in fashion, accessories, or cosmetics;

- Smart video editing and automatic scene clipping;

- Cross-platform expansion with consistent visual quality.

Conclusion

In short, the camera SDK market can cover it all: from basic photo and video capture to high-precision industrial solutions and real-time AR powerhouses.

- general SDKs keep things simple and stable;

- industrial and scientific ones push the limits of computer vision;

- AR and video SDKs turn any mobile device into a creative engine;

- IoT SDKs connect our homes and devices and give them eyes.

When your business needs innovation, creative freedom, and growth potential, Banuba SDKs take the lead. Their on-device AI processing, cross-platform compatibility, face tracking, advanced AR features, and privacy-first design give developers the tools to build AR-driven apps without reinventing the wheel.

Try Banuba Camera SDK free for 14 days and see how easily next-gen AR and video features fit into your app.

Reference List

Agora.io. (n.d.). IoT SDK. Retrieved October 27, 2025, from https://www.agora.io/en/products/iot-sdk/

Apple Inc. (n.d.). AVFoundation framework documentation. Retrieved October 27, 2025, from https://developer.apple.com/documentation/avfoundation

Banuba. (n.d.). AI face recognition SDK. Retrieved October 27, 2025, from https://www.banuba.com/face-recognition-sdk

Banuba. (n.d.). AI clipping video editor SDK. Retrieved October 27, 2025, from https://www.banuba.com/blog/ai-clipping-video-editor-sdk

Banuba. (n.d.). Banuba Community. Retrieved October 27, 2025, from https://community.banuba.com/

Banuba. (n.d.). Banuba GitHub repository. Retrieved October 27, 2025, from https://github.com/Banuba

Banuba. (n.d.). Bermuda Face AR SDK case. Retrieved October 27, 2025, from https://www.banuba.com/blog/bermuda-face-ar-sdk-case

Banuba. (n.d.). Camera SDK. Retrieved October 27, 2025, from https://www.banuba.com/camera-sdk

Banuba. (n.d.). Full guide to AR in eCommerce. Retrieved October 27, 2025, from https://www.banuba.com/blog/full-guide-to-ar-in-ecommerce

Banuba. (n.d.). Oceane success story. Retrieved October 27, 2025, from https://www.banuba.com/blog/oceane-success-story

Banuba. (n.d.). Over 20M downloads: Videoshop success story. Retrieved October 27, 2025, from https://www.banuba.com/blog/over-20m-downloads-videoshop-success-story

Banuba. (n.d.). Video Editor SDK WEAT case study. Retrieved October 27, 2025, from https://www.banuba.com/blog/video-editor-sdk-weat-case-study

Banuba. (2025, October 10). Banuba unveils next-generation AI for flawless virtual backgrounds [Press release]. Business Wire. https://www.businesswire.com/news/home/20251010633225/en/Banuba-Unveils-Next-Generation-AI-for-Flawless-Virtual-Backgrounds

Google. (n.d.). Camera2 API reference. Retrieved October 27, 2025, from https://developer.android.com/reference/android/hardware/camera2/package-summary

Google. (n.d.). CameraX release notes. Retrieved October 27, 2025, from https://developer.android.com/jetpack/androidx/releases/camera

Google. (n.d.). ML Kit guides. Retrieved October 27, 2025, from https://developers.google.com/ml-kit/guides

Grand View Research. (2023). Machine vision market report, 2023–2030. Retrieved from https://www.grandviewresearch.com/industry-analysis/machine-vision-market

Grand View Research. (2024). Artificial intelligence (AI) camera market report, 2024–2030. Retrieved from https://www.grandviewresearch.com/industry-analysis/artificial-intelligence-ai-camera-market-report

OpenCV. (2023). OpenCV applications in 2023. Retrieved from https://opencv.org/blog/opencv-applications-in-2023/

OpenCV. (n.d.). OpenCV documentation. Retrieved October 27, 2025, from https://docs.opencv.org/3.4/da/d2a/tutorial_O4A_SDK.html

OpenCV. (n.d.). OpenCV official website. Retrieved October 27, 2025, from https://opencv.org/

PubMed Central. (2021). Machine vision in industrial applications [Article]. PMC. https://pmc.ncbi.nlm.nih.gov/articles/PMC8533103/

Shopify. (n.d.). 3D eCommerce: How to bring products to life online. Retrieved October 27, 2025, from https://www.shopify.com/blog/3d-ecommerce

Structure.io. (n.d.). Backscnr case study. Retrieved October 27, 2025, from https://structure.io/case-studies/backscnr/

Structure.io. (n.d.). Rise Bionics case study. Retrieved October 27, 2025, from https://structure.io/case-studies/rise-bionics/

Structure.io. (n.d.). Structure SDK overview. Retrieved October 27, 2025, from https://structure.io/structure-sdk/

Structure.io. (n.d.). Structure SDK tutorials. Retrieved October 27, 2025, from https://developer.structure.io/docs/tutorials/tutorials/

Threekit. (2023). 23 augmented reality statistics you should know in 2023. Retrieved from https://www.threekit.com/23-augmented-reality-statistics-you-should-know-in-2023

Tuya Smart. (n.d.). Tuya IoT app development overview. Retrieved October 27, 2025, from https://developer.tuya.com/en/docs/app-development/overview


The Banuba Camera SDK is the best choice for Android if your app needs more than just capturing a picture. It provides real-time AR filters, AI-driven beautification, background replacement, and smart video editing. On-device processing ensures low latency and data privacy. Banuba’s SDK is easy to integrate via Gradle and runs smoothly across most Android devices, maintaining a stable 30+ FPS even with multiple active effects.


The most advanced option is the Banuba Face AR SDK. It delivers real-time 3D face tracking, AR filters, beautification, and virtual try-ons, all processed on-device for low latency and privacy. Unlike most competitors, Banuba’s proprietary Face Kernel™ builds a full 3D head model directly, delivering accurate tracking on 90% of smartphones.


For smart-home integration, the Tuya Smart Camera SDK and the Agora IoT SDK are excellent choices. They power IP-camera streaming, motion detection, and two-way audio, with stable connectivity and adaptive bitrate streaming.


Banuba’s SDKs run all AI and AR computations on the device, not in the cloud. This means lower latency, stronger privacy, and full performance even offline. Their modular architecture covers multiple use cases, from video editing and live filters to eCommerce try-ons. Banuba’s SDKs are compatible with all major platforms: Android, iOS, Web, Flutter, React Native, and Unity.


Most SDKs offer native libraries for Android and iOS.

You’ll typically:

- Import the SDK package into your project.

- Initialize it with a client key or license.

- Configure permissions and UI components (camera view, effects, or streams).

- Run sample projects provided in the official docs.

For example, Banuba provides ready-to-use integration samples on GitHub, helping developers launch a working demo in under an hour.
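The integration steps above can be sketched as a small state machine that enforces their order. Every name here (`HypotheticalCameraSdk`, `initialize`, `grant_camera_permission`, `start_preview`, `apply_effect`, `demo_filter`) is a hypothetical stand-in, not Banuba’s or any other vendor’s API. Real Android or iOS code would use the vendor’s Kotlin or Swift calls from its official docs and samples, with the OS permission dialog in place of a boolean flag.

```python
from typing import List


class SdkStateError(RuntimeError):
    """Raised when an SDK call is made out of order."""


class HypotheticalCameraSdk:
    """Stand-in for a vendor camera SDK; not a real library API."""

    def __init__(self) -> None:
        self.initialized = False
        self.camera_permission = False
        self.running = False
        self.effects: List[str] = []

    def initialize(self, license_key: str) -> None:
        # Step 2: vendors typically require a client or license key first.
        if not license_key:
            raise ValueError("license key is required")
        self.initialized = True

    def grant_camera_permission(self, granted: bool) -> None:
        # Step 3: on a phone, this result would come from the OS permission dialog.
        self.camera_permission = granted

    def start_preview(self) -> None:
        # The camera view starts only after initialization and permission.
        if not self.initialized:
            raise SdkStateError("call initialize() first")
        if not self.camera_permission:
            raise SdkStateError("camera permission not granted")
        self.running = True

    def apply_effect(self, effect_name: str) -> None:
        # Effects are applied to a running preview only.
        if not self.running:
            raise SdkStateError("start the preview before applying effects")
        self.effects.append(effect_name)


def launch_demo(license_key: str, permission_granted: bool) -> HypotheticalCameraSdk:
    sdk = HypotheticalCameraSdk()                     # Step 1: SDK entry point
    sdk.initialize(license_key)                       # Step 2: license key
    sdk.grant_camera_permission(permission_granted)   # Step 3: permissions
    sdk.start_preview()                               # Step 3: camera view
    sdk.apply_effect("demo_filter")                   # hypothetical effect name
    return sdk
```

Enforcing the order in code turns typical integration mistakes, such as starting the preview before the license is validated or before camera permission is granted, into explicit errors instead of a blank camera view.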