
TL;DR:

- A virtual background lets users swap their real environment for a digital one during recording or calls;

- Background segmentation separates people from their surroundings using AI, no green screen required;

- Implementation varies depending on the approach and differs in accuracy, performance, and hardware impact;

- Choose a trusted app like Banuba, Covideo, or Hippo Video for reliable mobile virtual background recording;

- Custom development gives full control but requires more time, budget, and in-house expertise;

- Using an SDK like Banuba’s cuts development time to under a week and includes features like AR effects, cross-platform support, and on-device processing.

What Is a Virtual Background in Video Recording?

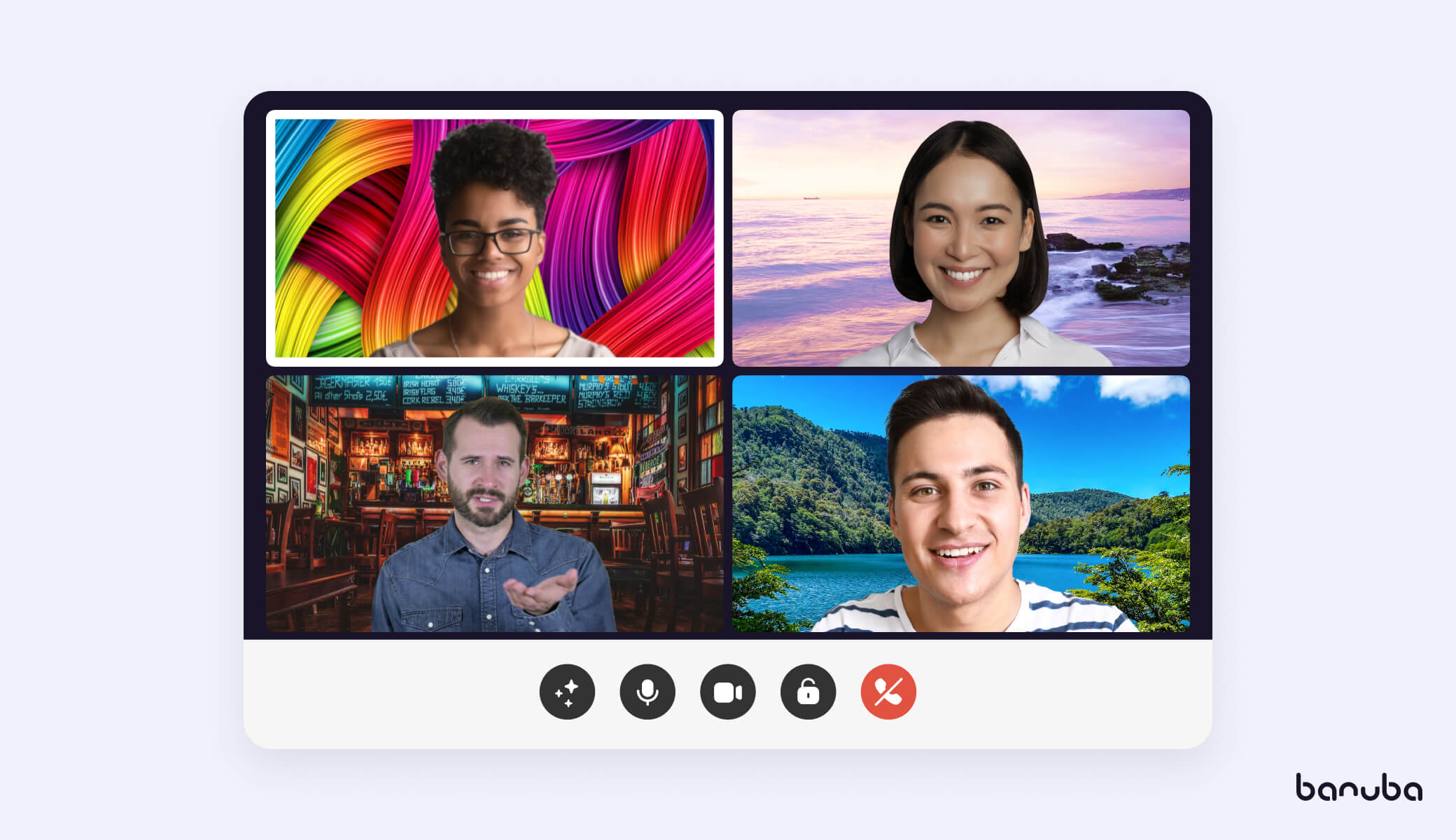

A virtual background is a feature that replaces your actual surroundings in a video with a digital image or video. Virtual backgrounds help create a more polished, distraction-free visual experience without needing a physical backdrop or dedicated studio, making them ideal for video conferencing, recording tutorials, filming content, or creating social media reels.

Virtual background from Banuba


Use Cases

Virtual backgrounds have become an essential feature for:

- Remote professionals who want to maintain privacy or present a more professional look on video calls;

- Content creators aiming for aesthetic or thematic consistency in their videos;

- Educators and streamers who want to personalize their environment or eliminate visual noise;

- Brands and businesses that want to reinforce brand identity across team videos or customer interactions.

Green Screen vs. AI-Based Virtual Backgrounds

Traditionally, virtual background changers in video required a green screen, a chroma key setup that demands uniform lighting and post-processing skills. While effective, it’s far from convenient for everyday users.

Modern virtual backgrounds, on the other hand, rely on AI-powered background segmentation. These solutions can distinguish the user from their surroundings in real-time without the use of green screens.

Key Takeaways

- A virtual background lets users swap their real environment for a digital one during recording or calls;

- AI-powered solutions eliminate the need for a green screen, making the experience accessible and instant;

- Use cases range from work calls and content creation to brand storytelling and education.

How Does Virtual Background Technology Work?

Behind the simplicity of a one-tap virtual background lies a sophisticated blend of computer vision, AI, and deep learning, which forms the basis of background segmentation algorithms. Let’s dive into it.

Background Segmentation: The Core Engine

The foundation of virtual background technology is background segmentation. This process uses machine learning models to identify and isolate the foreground (you) from the background (your environment).

Unlike traditional chroma keying, this approach works without color-based markers and relies on deep learning models trained to understand human shapes, motion, and visual context.

Here’s how background segmentation works under the hood:

1. Input Acquisition

The app captures a real-time video stream from the front or rear camera. This video is broken down into frames that are processed individually or in batches, depending on the architecture.
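The choice between per-frame and batched processing can be sketched as follows. This is a minimal illustration only: `batched_frames` is a hypothetical helper, and the string identifiers stand in for real camera frames.

```python
from itertools import islice
from typing import Iterable, Iterator, List, TypeVar

Frame = TypeVar("Frame")


def batched_frames(frames: Iterable[Frame], batch_size: int = 1) -> Iterator[List[Frame]]:
    """Group a frame stream into lists of up to batch_size frames."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    iterator = iter(frames)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


# Stand-in for a camera stream: five frame identifiers (hypothetical data).
stream = ["f0", "f1", "f2", "f3", "f4"]
per_frame = list(batched_frames(stream, batch_size=1))  # one frame at a time
in_pairs = list(batched_frames(stream, batch_size=2))   # small batches
```

A batch size of 1 keeps latency lowest, which usually matters most for live preview; larger batches trade latency for throughput.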

2. Preprocessing & Normalization

Each frame undergoes normalization:

- Color correction and brightness balancing to account for different lighting conditions;

- Resizing to reduce computational load while preserving subject detail;

- Optional use of face detection or pose estimation to center the subject.
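As a rough sketch of the resizing and value-scaling part of this step, the snippet below downsamples a tiny grayscale frame and maps pixel values to the 0 to 1 range. It assumes nearest-neighbor resizing and plain Python lists for readability; color correction and subject centering are left out, and the function names are illustrative.

```python
from typing import List

Gray = List[List[int]]  # rows of 0-255 luminance values


def resize_nearest(image: Gray, out_h: int, out_w: int) -> Gray:
    """Downsample by picking the nearest source pixel for each output pixel."""
    in_h, in_w = len(image), len(image[0])
    return [
        [image[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
        for y in range(out_h)
    ]


def normalize(image: Gray) -> List[List[float]]:
    """Scale 0-255 values to the 0.0-1.0 range most models expect."""
    return [[value / 255.0 for value in row] for row in image]


# A made-up 4x4 frame reduced to 2x2 before inference.
frame = [
    [0, 0, 255, 255],
    [0, 0, 255, 255],
    [51, 51, 102, 102],
    [51, 51, 102, 102],
]
small = resize_nearest(frame, 2, 2)
model_input = normalize(small)
```

In a real app the same operations would run on the GPU or through a vectorized image library rather than in nested loops.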

3. Neural Network Inference (Segmentation Model)

The frame is fed into a deep learning model — typically a lightweight convolutional neural network (CNN) or transformer-based segmentation model optimized for edge devices.

These models are trained on thousands of annotated images to:

- Detect the presence of a human figure;

- Predict a binary or multi-class segmentation mask (foreground vs. background);

- Preserve fine details (like hair strands, glasses, and fingers) using edge-aware refinement.
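To make the idea of a segmentation mask concrete, here is a minimal sketch of turning a model's per-pixel foreground probabilities into a binary mask. The probability values are invented for illustration, and the 0.5 threshold is an assumption rather than a setting of any particular model.

```python
from typing import List


def to_binary_mask(probabilities: List[List[float]], threshold: float = 0.5) -> List[List[int]]:
    """Mark a pixel as foreground (1) when its probability reaches the threshold."""
    return [[1 if p >= threshold else 0 for p in row] for row in probabilities]


# Hypothetical model output for a 2x3 frame: higher means "more likely a person".
probabilities = [
    [0.05, 0.40, 0.92],
    [0.10, 0.75, 0.98],
]
mask = to_binary_mask(probabilities)
```

Keeping the raw probabilities instead of the thresholded values gives a soft mask, which tends to blend better around hair and other fine edges.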

4. Post-Processing and Mask Refinement

Raw segmentation masks are rarely perfect, so additional steps are applied:

- Temporal smoothing to stabilize the mask across frames;

- Edge softening to eliminate jagged transitions;

- Morphological operations (e.g., dilation and erosion) to clean up mask artifacts.
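Two of these refinements can be sketched in a few lines. The example assumes an exponential moving average for temporal smoothing and a 3x3 window for erosion and dilation; both are common choices, not necessarily what any given SDK uses.

```python
from typing import List

Mask = List[List[float]]  # per-pixel foreground confidence, 0.0 to 1.0


def smooth_temporal(previous: Mask, current: Mask, weight: float = 0.6) -> Mask:
    """Blend the current mask with the previous one (exponential moving average)."""
    return [
        [weight * cur + (1.0 - weight) * prev for prev, cur in zip(prev_row, cur_row)]
        for prev_row, cur_row in zip(previous, current)
    ]


def _window(mask: Mask, y: int, x: int) -> List[float]:
    """Values in the 3x3 neighbourhood of (y, x), clipped at the image border."""
    rows = range(max(0, y - 1), min(len(mask), y + 2))
    cols = range(max(0, x - 1), min(len(mask[0]), x + 2))
    return [mask[j][i] for j in rows for i in cols]


def erode(mask: Mask) -> Mask:
    """Shrink foreground regions: a pixel keeps the minimum of its window."""
    return [[min(_window(mask, y, x)) for x in range(len(mask[0]))] for y in range(len(mask))]


def dilate(mask: Mask) -> Mask:
    """Grow foreground regions: a pixel takes the maximum of its window."""
    return [[max(_window(mask, y, x)) for x in range(len(mask[0]))] for y in range(len(mask))]


def open_mask(mask: Mask) -> Mask:
    """Erosion followed by dilation removes specks smaller than the window."""
    return dilate(erode(mask))


# A 3x3 subject plus one stray foreground pixel in the top-left corner.
noisy = [
    [1, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
    [0, 0, 1, 1, 1, 0],
    [0, 0, 1, 1, 1, 0],
    [0, 0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0, 0],
]
cleaned = open_mask(noisy)
blended = smooth_temporal([[0.0, 1.0]], [[1.0, 1.0]], weight=0.5)
```

Here the stray pixel disappears while the subject block survives intact. A higher `weight` makes the mask react faster to motion at the cost of more flicker.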

5. Background Replacement and Compositing

Once the clean foreground mask is generated:

- The chosen background (image or video) is layered beneath the user’s segmented image;

- Real-time compositing is done using GPU acceleration;

- Lighting and color blending are adjusted dynamically so that the user and the new background look consistent, minimizing visual disconnect.
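The layering step comes down to standard alpha blending: each output pixel is `alpha * foreground + (1 - alpha) * background`, where alpha is the mask value. Below is a minimal CPU sketch with made-up pixel values; a production pipeline would run the same formula in a GPU shader.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # RGB, 0-255 per channel
Image = List[List[Pixel]]


def composite(foreground: Image, background: Image, alpha: List[List[float]]) -> Image:
    """Blend each pixel: alpha 1.0 keeps the camera frame, 0.0 shows the new background."""
    result = []
    for fg_row, bg_row, a_row in zip(foreground, background, alpha):
        result.append([
            tuple(round(a * f + (1.0 - a) * b) for f, b in zip(fg_px, bg_px))
            for fg_px, bg_px, a in zip(fg_row, bg_row, a_row)
        ])
    return result


# One row of three pixels: subject, soft edge, and pure background.
camera = [[(200, 100, 50), (200, 100, 50), (200, 100, 50)]]
backdrop = [[(0, 0, 100), (0, 0, 100), (0, 0, 100)]]
mask = [[1.0, 0.5, 0.0]]
output = composite(camera, backdrop, mask)
```

The middle pixel shows why soft masks matter: a fractional alpha mixes both sources and avoids a hard cut-out edge.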

6. Output Rendering

The final video of a user with a virtual background is rendered in real time and displayed or recorded. Thanks to GPU-based acceleration and model compression, the entire pipeline can run at 30–60 FPS on modern smartphones while keeping CPU usage low.
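Those frame rates translate into a fixed time budget per frame: 1000 ms divided by the target FPS. The sketch below checks a set of stage timings against that budget. The per-stage numbers are purely hypothetical and are not measurements of any SDK or device.

```python
from typing import Dict


def frame_budget_ms(fps: float) -> float:
    """Time available to process one frame at the given frame rate."""
    return 1000.0 / fps


def fits_budget(stage_times_ms: Dict[str, float], fps: float) -> bool:
    """True when the summed stage times fit inside one frame interval."""
    return sum(stage_times_ms.values()) <= frame_budget_ms(fps)


# Hypothetical per-stage timings in milliseconds (illustrative only).
stages = {
    "preprocess": 2.0,
    "inference": 12.0,
    "postprocess": 3.0,
    "compositing": 4.0,
}
ok_at_30 = fits_budget(stages, 30)  # budget is about 33.3 ms
ok_at_60 = fits_budget(stages, 60)  # budget is about 16.7 ms
```

With these example numbers the pipeline takes 21 ms per frame, so it would sustain 30 FPS but not 60 FPS unless stages overlap or get faster.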

AI-Based Segmentation vs. Traditional Chroma Key: Implementation Differences

Traditional chroma keying and AI-based segmentation are two primary methods for removing or replacing the background in videos. Both aim to isolate the subject from the background, but they differ in their impact on accuracy, performance, and device efficiency.

Chroma key uses color detection (usually green) to remove backgrounds. It works well under controlled studio conditions but struggles in real-world scenarios. Shadows, color spill, or green clothing can easily break the effect, making it unsuitable for mobile-first or casual environments.
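A simplified color-based key shows both how chroma keying works and why green clothing defeats it. The rule used here (green must exceed the other channels by a fixed ratio) and the `dominance` value are assumptions chosen for illustration; real keyers use more elaborate color-space math.

```python
from typing import Tuple

Pixel = Tuple[int, int, int]  # RGB, 0-255 per channel


def chroma_key_alpha(pixel: Pixel, dominance: float = 1.3) -> float:
    """Return 0.0 (transparent) for green-dominant pixels, 1.0 (opaque) otherwise."""
    red, green, blue = pixel
    is_green = green > dominance * max(red, blue, 1)
    return 0.0 if is_green else 1.0


green_screen = (20, 220, 30)
skin_tone = (210, 160, 140)
green_shirt = (30, 200, 40)

screen_alpha = chroma_key_alpha(green_screen)  # removed, as intended
skin_alpha = chroma_key_alpha(skin_tone)       # kept
shirt_alpha = chroma_key_alpha(green_shirt)    # also removed: the known failure case
```

Because the decision depends only on color, the shirt is treated exactly like the screen, which is the limitation that shape-aware segmentation avoids.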

AI segmentation, on the other hand, uses deep learning to identify people in a frame based on shape, movement, and context, not color. This method delivers significantly better accuracy across diverse conditions, handling details such as hair and accessories, even in suboptimal lighting.

In terms of performance, chroma key requires minimal processing power but often demands post-production to clean up artifacts. AI-based segmentation processes each frame through a neural network, which is more resource-intensive. However, with optimization (model compression, GPU acceleration, and edge inference), modern SDKs can run smoothly at 30–60 FPS even on mid-tier smartphones.

As for battery and CPU/GPU usage, chroma keying is light, while AI segmentation draws more power. But with mobile-ready frameworks like TensorFlow Lite or Core ML, and efficient pipelines like Banuba’s rendering, the gap becomes small enough to make real-time virtual background replacement viable even on the go.

Chroma Key vs. AI Segmentation: Implementation Comparison

AI-based solutions, like Banuba’s, address the main drawbacks of the chroma key approach:

- No setup: just launch and go;

- Real-time rendering: no editing required;

- Cross-device support: mobile, desktop, web;

- Better scalability: ideal for app developers and teams on the go.

Key Takeaways

- Background segmentation separates people from their surroundings using AI, no green screen required;

- Implementation varies depending on the approach and differs in accuracy, performance, and hardware impact;

- Compared to chroma key setups, AI-based solutions are easier, faster, and more accessible.

Apps for Recording Video with Virtual Background

Features, pricing, and usability of apps promising virtual background capabilities vary, especially when it comes to mobile readiness and real-time performance. We’ve already reviewed a list of top solutions, and below is a breakdown of the market leaders:

1. Hippo Video

Hippo Video


Hippo Video offers both real-time virtual backgrounds and post-production editing, making it a flexible choice for business users and marketers. Users can record with a background applied live or edit it afterward.

- Platforms: Web, mobile (limited), Chrome extension;

- Use case: Sales outreach, tutorials, internal comms;

- Pricing: Starts at free for basic features; advanced features require a higher-tier plan, starting from $20/month;

- Pros: Integrated with CRMs and email tools;

- Cons: Limited real-time options on mobile.

2. Covideo

Covideo virtual background


Covideo is geared toward quick video messages with custom-branded backgrounds. Like Hippo Video, it serves business and marketing users with real-time and post-recording features.

- Platforms: Web, mobile (limited), Chrome extension;

- Use case: Sales outreach, tutorials, internal comms;

- Pricing: Starts at free for basic features; advanced features require a higher-tier plan, starting from $20/month;

- Pros: Integrated with CRMs and email tools;

- Cons: Limited real-time options on mobile.

3. Virtual Stage Camera

Virtual Stage Camera


This mobile-first app focuses on real-time background replacement, often used by streamers and creators. It uses camera overlays and supports video backgrounds.

- Platforms: iOS and Android;

- Use case: Social video, live streaming;

- Pricing: Free basic version with in-app purchases;

- Pros: Lightweight, easy to use;

- Cons: Limited output resolution and recording length on the free tier.

Other Solutions

Depending on your needs, you might also explore:

- Background video recorder apps are designed for stealth or discreet background capture. They are often used for compliance or passive monitoring;

- Off-screen video recorders are ideal for remote teams needing distraction-free presentations, often with background blur or static imagery.

Key Takeaways

- Hippo Video and Covideo serve business and marketing users with real-time and post-recording features;

- Virtual Stage Camera is ideal for content creators who prioritize mobile devices;

- Many niche tools exist for off-screen or stealth video needs, but lack broad appeal.

How to Record a Video with Virtual Background on Android & iOS

Below is a brief, step-by-step guide for recording with a virtual background on mobile devices.

Choosing the Right App

Start by picking a mobile app that offers real-time virtual background support for your OS:

- Banuba: Offers real-time virtual background features with high-quality segmentation, AR overlays, and SDK support for app builders;

- Covideo: Great for quick video messages with custom-branded backgrounds;

- Hippo Video: Useful for asynchronous content and marketing videos, with limited mobile functionality;

- Virtual Stage Camera: Focused on live content creation and streaming.

Install and Set Up

Download the app from the App Store or Google Play. Once installed:

- Allow camera and microphone access;

- Adjust any default settings like resolution, frame rate, or output format;

- If you're using Banuba’s SDK demo, you may be prompted to test different virtual backgrounds or enable AR filters.

Select or Upload Your Background

Most apps offer a library of preloaded backgrounds (images and videos), plus the ability to upload your own.

- Choose a neutral background for professional use;

- Pick thematic or animated visuals for creative or branded content;

- Test how the background interacts with your appearance—avoid high-contrast visuals that clash with your clothing or hair.

Recording Tips for Best Results

Background replacement from Banuba


To get the most from your mobile setup:

- Lighting: Use soft, diffused front lighting. Avoid strong backlight or shadows;

- Positioning: Keep your face centered and maintain a stable frame. Prop your phone on a stand or use tripod mode;

- Privacy: Review app permissions to ensure no background data is shared without consent;

- Audio: Use a headset mic or external microphone for better sound clarity.

Key Takeaways

- Choose a trusted app like Banuba, Covideo, or Hippo Video for reliable mobile virtual background recording.

- Setup is fast, but granting permissions and checking resolution settings improves output.

- Utilize high-quality lighting, stable framing, and visually appealing background elements for optimal results.

- For professional use, test how your visuals appear with your chosen background before hitting record.

How to Add a Virtual Background Feature to Your Own App

The ability to record a video with a virtual background is no longer a nice-to-have feature for your app; it’s expected. There are two main paths to bring virtual backgrounds into your app:

Option 1: Build from Scratch

Developing your own virtual background engine might sound appealing because it gives you complete control, but it’s complex and resource-intensive.

Pros:

- Total control over everything, from the selection of algorithms to the record button color. You are limited only by the technology and your budget.

- Cheaper in the long run than using licensed software. Monthly or yearly payments add up, while the proprietary software will start paying for itself.

Cons:

- More expensive in the short term because you have to invest a lot of money in development before seeing any results.

- Longer time-to-market, as you need to develop every feature from scratch instead of using premade SDKs/APIs.

- Fewer features from the beginning – once again, they need to be built first, while an SDK will already have more functionalities than just virtual background (trimming, upload to YouTube, visual effect arrays, etc.).

Unless you’re a large tech company with an in-house computer vision and video processing team, this route rarely justifies the effort. Making an app record video with a virtual background isn’t cheap: a single computer vision specialist can command a salary of over $100,000/year.

Option 2: Use a Virtual Background SDK

A smarter and faster alternative is to integrate a ready-made SDK or API. This saves time, reduces risk, and ensures your app reaches the market faster with a polished and stable solution.

An SDK is a pre-made module that can perform specific functions (such as replacing a green screen, inserting virtual backgrounds, recording videos, adding music, etc.) and is designed to be quickly integrated into a market-ready project or one still in development.

An API in this context is a similar product that is more customizable at the cost of having fewer features.

These are the advantages of using an SDK/API:

- Shorter time-to-market. With an SDK, your users will be able to record video with a virtual background after a week of work max. Developing it from scratch will take months. In addition, SDKs might have native integrations with other SDKs, so you’ll be able to quickly acquire large chunks of functionality and integrate them with no problems. This approach can save up to 50% total development time.

- Cheaper in the short term. A yearly license for an SDK is much less expensive than hiring a development team and paying its salaries while it works to develop the same feature set.

- Less risk. If you invest less money and time in releasing your video recording app, you don’t stand to lose as much, should it fail to become a hit.

However, there are also drawbacks:

- More expensive in the long run. Over the years, the license payments will make the total cost of ownership higher than that of a fully custom app.

- Vendor lock-in. Once you install a virtual background SDK to record and process your videos, switching to another one would be challenging.
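The short-term versus long-term cost trade-off can be checked with simple break-even arithmetic. Every figure in the sketch below is hypothetical and chosen only to show the calculation; plug in your own quotes and salary estimates.

```python
from typing import Optional


def break_even_year(
    build_cost: float,
    build_yearly: float,
    sdk_setup: float,
    sdk_yearly: float,
    horizon_years: int = 20,
) -> Optional[int]:
    """First year in which cumulative custom-build cost is no higher than the SDK's."""
    for year in range(1, horizon_years + 1):
        custom_total = build_cost + build_yearly * year
        sdk_total = sdk_setup + sdk_yearly * year
        if custom_total <= sdk_total:
            return year
    return None  # custom development never catches up within the horizon


# Hypothetical inputs: a costly initial build with modest upkeep vs. a yearly license.
catch_up = break_even_year(
    build_cost=300_000, build_yearly=50_000,
    sdk_setup=10_000, sdk_yearly=120_000,
)
never = break_even_year(
    build_cost=300_000, build_yearly=50_000,
    sdk_setup=10_000, sdk_yearly=40_000,
)
```

With the first set of numbers the custom build becomes cheaper in year 5; with the second, where the license costs less per year than maintaining in-house code, it never does.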

Banuba’s Virtual Background SDK is a standout option, offering:

- Integration time: 1 week or less;

- Cross-platform support: Android, iOS, Windows, and Web;

- Real-time performance even on low-range devices;

- Detailed guidance and documentation;

- Built-in AR filters and beautification effects;

- Access to 1000+ digital assets via Banuba's Asset Store;

- A Web Studio for creating custom filters and effects;

- Fully white-label & customizable for brand alignment;

- Privacy-first architecture: no user data leaves the device;

- Support for cross-platform mobile frameworks, e.g., Flutter Video Editor and React Native;

- A suite of core video editing features: trimming, music editor, and more.

Banuba’s SDK also powers solutions for AR conferencing, expanding use cases beyond recording to include live virtual meetings, branded video calls, and more.

“Banuba’s SDK saved us months of R&D. The segmentation quality is on par with enterprise video tools. It worked on our first test device without a single frame drop.” - Alex M., Lead Mobile Developer at a SaaS video startup

Technical Requirements for Android & iOS

In this section, we will guide you through the process of recording video with a virtual background in your app in just a few simple steps. With this, you will also be able to process existing videos, start recording them from the app, and add various effects to your recordings.

This is what you’ll need:

Android

- The latest Banuba Video Editor and Face AR SDK releases

- Java 1.8+

- Kotlin 1.4+

- Android Studio 4+

- Android OS 6.0 or higher with Camera2 API

- OpenGL ES 3.0 (3.1 for neural networks on GPU)

iOS

- The latest Banuba Video Editor and Face AR SDK releases

- iPhone 6 or later

- Swift 5+

- Xcode 13.0+

- iOS 12.0+

Note that both SDKs are needed to use background replacement in the video editor. Combined, they will occupy 80.5 MB of space on Android and 42 MB on iOS.

To get the releases, just send us a message through a contact form.

iOS

The step-by-step instructions and code samples can be found on our GitHub page. There, you will also see a list of dependencies, technical details, customization options, and more. We also have an FAQ that will answer any questions you may have.

Android

To get the virtual background feature on Android, go to the relevant GitHub page. As with iOS, there are also dependencies, supported media formats, recording quality parameters, and customization options. And this is the FAQ for Android.

Hybrid

Banuba Video Editor SDK supports Flutter and React Native. Click the framework you are interested in to see the guide.

Key Takeaways

- You can either build your own virtual background engine or integrate a ready-made SDK: each has pros and cons;

- Custom development gives full control but requires more time, budget, and in-house expertise;

- Using an SDK like Banuba’s cuts development time to under a week and includes features like AR effects, cross-platform support, and on-device processing;

- Banuba’s solution supports Android, iOS, Flutter, and React Native, with detailed docs and GitHub guides for fast implementation;

- SDKs may lead to vendor lock-in and higher long-term costs, but they reduce short-term risk and speed up launch.

Tips for High-Quality Video Recording with Virtual Background

Even the most advanced AI background segmentation relies on decent lighting, framing, and audio conditions to deliver top-tier results. Here are some tips to take your video recording with a virtual background to the next level.

Optimize Lighting and Camera Position

Lighting is the foundation of clean segmentation even for AI:

- Use soft, front-facing light sources (such as a ring light or window). Avoid backlight;

- Avoid overhead or side shadows that can confuse the background detection;

- Position your camera at eye level and about an arm’s length away for a natural frame.

Stable lighting makes it easier for the software to cleanly separate you from your environment.

Choose the Right Background

The best virtual background is the one that enhances, rather than distracts from, your message:

- Static images: Great for business calls or minimalistic branding;

- Video backgrounds: Eye-catching, but should be subtle and smooth;

- Branded visuals: Use logos, brand colors, or themed environments to reinforce identity.

Avoid cluttered visuals, bright neon colors, or high-contrast patterns that can overpower the subject.

Mind Your Audio and Environment

Your background may look great, but audio still matters:

- Use a headset mic or lavalier to avoid room echo;

- Record in a quiet room with soft furnishings (such as curtains and carpets) to minimize reverb;

- Close windows and turn off background noise sources.

Good sound makes your video feel professional, even if you're filming on a smartphone.

Avoid Common Mistakes

Even great apps can struggle if users make basic errors:

- Don’t wear clothes that match your background. It can cause “invisibility” effects, meaning your T-shirt can blend in with your wall, turning you into Harry Potter the first time he put on the invisibility cloak;

- Avoid rapid body movements, especially hand gestures near the face;

- Keep your background choice consistent with your message. A tropical beach might not work for a corporate sales pitch, but it might work for a vacation announcement.

Test your setup before hitting record, especially if you're using a virtual background for professional content.

Key Takeaways

- Soft, consistent lighting helps AI segmentation work effectively;

- Use backgrounds that suit your purpose—professional, branded, or creative;

- Prioritize a good audio setup with an external mic and a quiet environment;

- Avoid visual clashes and fast motion to reduce segmentation artifacts.

