
TL;DR:

- Modern filters are powered by AR, AI, and real-time computer vision;

- Camera filters add value in social media, video calling, dating, education, editing, and gaming apps. They increase engagement, boost retention, and enhance personalization;

- Real-time camera filters are possible in React Native, with native integrations;

- The most stable, scalable option is using a dedicated camera filter SDK like Banuba’s Face AR SDK;

- Setup requires native configuration for both iOS and Android;

- Test on multiple devices and in real-world conditions to ensure consistent UX;

- Most integration issues come from native misconfiguration or permission handling;

- Built-in tools and effects reduce dev time, making it ideal for apps that scale.

Understanding Camera Image Filters in React Native

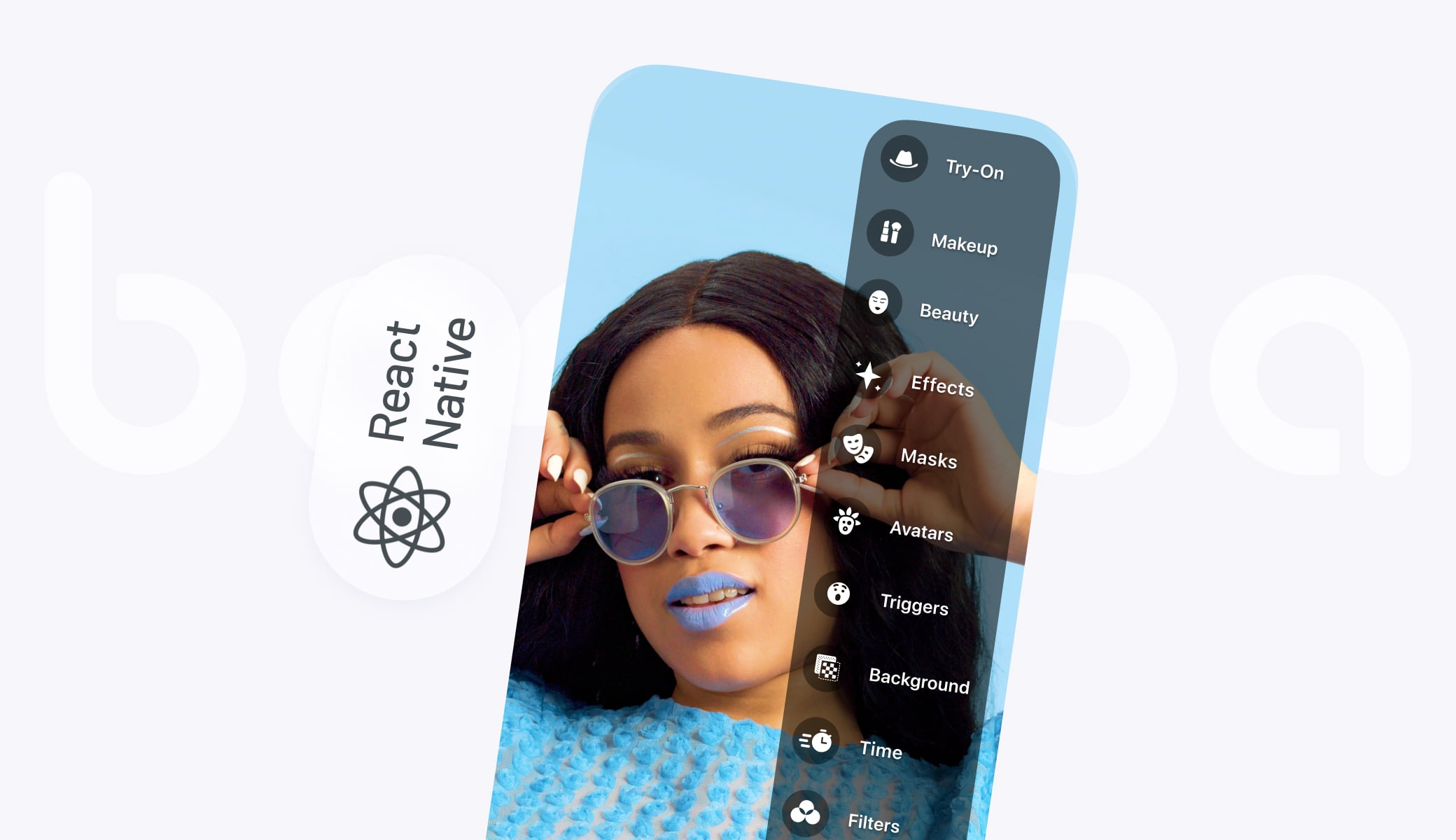

A camera image filter is a visual effect applied to a real-time video feed. But today’s filters go far beyond sepia tones or dog ears. They leverage augmented reality, computer vision, and face tracking to create dynamic, interactive effects that respond to user expressions, movements, or gestures.

Below are the most common types supported in React Native apps:

- LUTs (Instagram filters). Color correction presets, like sepia or black-and-white.

- Face masks. 2D or 3D objects that cover the users’ faces or change them completely.

- Virtual backgrounds. Effects that segment out the human in the picture and replace everything else. Works as a “green screen” or helps protect privacy in video calls.

- Beautification (touch-up). Smoothing skin, removing blemishes, whitening teeth, evening out skin tone, and other cosmetic improvements.

- Face editing. Virtually changing the shape of the user’s face and facial features.

- Virtual makeup. A React Native image filter that adds mascara, lipstick, hair color, and other virtual beauty products.

- Video effects. Various changes to the video feed, e.g., slo-mo, rapid, rave, etc.

- Try-on. Checking out virtual items (eyeglasses, hats, jewelry, etc.) as if they were real.

- Avatars. Replacing the user’s face and possibly body with a 3D character that copies their facial expressions.

- Triggers. Animated effects that launch when the user takes a certain action (smiles, frowns, shows a “victory” hand sign, etc.).

You can offer all of these React Native augmented reality effects or only a selection. The choice depends on your business needs and target audience.
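To make the LUT idea concrete, here is a minimal sketch of a per-channel lookup table in plain JavaScript. It assumes frames arrive as an RGBA byte array. The function names (`buildGammaLut`, `applyLut`) and the gamma curve are illustrative and not part of any SDK, and production filters typically evaluate 3D LUTs on the GPU rather than looping over pixels in JavaScript.

```javascript
// Build a 256-entry lookup table for a gamma curve (an illustrative preset).
function buildGammaLut(gamma) {
  const lut = new Uint8ClampedArray(256);
  for (let i = 0; i < 256; i++) {
    lut[i] = Math.round(255 * Math.pow(i / 255, 1 / gamma));
  }
  return lut;
}

// Map every R, G, and B byte through the table; alpha (every 4th byte) is copied as-is.
function applyLut(rgba, lut) {
  const out = new Uint8ClampedArray(rgba.length);
  for (let i = 0; i < rgba.length; i += 4) {
    out[i] = lut[rgba[i]];
    out[i + 1] = lut[rgba[i + 1]];
    out[i + 2] = lut[rgba[i + 2]];
    out[i + 3] = rgba[i + 3];
  }
  return out;
}

// Usage: brighten the midtones of a single mid-gray pixel.
const brightenLut = buildGammaLut(2.2);
const grayInput = new Uint8ClampedArray([128, 128, 128, 255]);
const brightened = applyLut(grayInput, brightenLut);
```

Because the table is computed once, applying the preset costs one array lookup per channel, which is why LUT-style filters are among the cheapest effects to run.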

Key Takeaways

- Modern filters are powered by AR, AI, and real-time computer vision;

- There are 10 common types of React Native camera image filters.

Top Use Cases for Camera Filters in React Native Apps

Camera filters aren’t just fun. They’re strategic tools that improve user experience, retention, and monetization across industries. Below are the top real-world applications of camera image filters in React Native apps:

- Social media. TikTok, the most popular short video platform, is one of the driving forces behind the popularity of filters. Whatever your focus, Instagram-style React Native image filters encourage the creation of viral content and increase user engagement.

- Video calling software. React Native augmented reality face masks add fun to casual conversations, and background replacement protects privacy.

- Dating apps. Filters and effects can help increase user retention in dating apps.

- eLearning. React Native image filters improve learning outcomes in educational apps for both children and adults.

- Photo/video editors. Various effects are now an expected feature in mobile photo and video editing apps.

- Games. Facial expressions can control the game's flow, and masks can serve as avatar customization options and rewards.

Key Takeaways

- Camera filters add value in social media, video calling, dating, education, editing, and gaming apps;

- They increase engagement, boost retention, and enhance personalization.

Technical Approaches to Implementing Camera Filters

So, is it possible to add real-time effects/filters to the camera in React Native? Yes, but it requires native-level performance and integration. While React Native provides flexibility and a shared codebase for both major mobile platforms, it doesn’t natively support GPU-intensive operations like real-time face tracking or AR rendering. Bridging to optimized native modules or using dedicated SDKs becomes critical.

Let’s explore the most common technical approaches to implementing camera image filters in React Native.

Dedicated SDKs (Recommended)

The most reliable path is integrating a cross-platform SDK like Banuba’s Face AR SDK, which handles:

- Face detection and 3D tracking;

- Real-time filter rendering;

- Beautification and virtual makeup;

- Optimization for both iOS and Android.

When choosing this option, consider the following:

Pros:

- Drastically decreased development time thanks to easy integration;

- Prebuilt modules for common features like face detection, beautification, and AR masks;

- Cross-platform compatibility (iOS, Android) with React Native bridges or wrappers;

- Performance optimization via native code, GPU acceleration, and compressed models;

- Access to a large library of ready-made filters and effects;

- Enterprise SDKs often include support, documentation, and analytics;

- Lower initial investment that frees up budget for other things (e.g., marketing or development of extra features).

Cons:

- Require native setup for each platform;

- Vendor lock-in risks if migrating to another SDK later.

Best for: production-grade apps that need consistency, speed, and high-quality effects.

Vision Camera + Skia

Vision Camera (by Margelo) is a high-performance camera module for React Native. Paired with Skia (2D graphics rendering), it can create custom filters like LUTs or basic visual overlays.

Pros:

- Fully JS-bridge-free using JSI;

- Strong community support;

- Good for lightweight effects;

Cons:

- No built-in face tracking;

- Requires manual implementation of most filter logic;

- Performance varies based on effect complexity.

Best for: custom projects with limited AR needs or in-house graphics teams.
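As an illustration of the kind of filter logic you would write yourself with this approach, the sketch below builds a saturation filter as a 4x5 row-major color matrix (20 numbers), the layout Skia uses for color-matrix filters. The function names are hypothetical, the luminance weights are the common Rec. 709 values, and wiring the matrix into a Skia color filter on the camera preview is left out because it depends on your Vision Camera and Skia setup.

```javascript
// Rec. 709 luminance weights, commonly used for desaturation.
const LUMA = { r: 0.2126, g: 0.7152, b: 0.0722 };

// Returns a 4x5 row-major color matrix (20 numbers).
// s = 1 keeps colors unchanged, s = 0 yields grayscale.
function saturationMatrix(s) {
  const inv = 1 - s;
  const r = LUMA.r * inv;
  const g = LUMA.g * inv;
  const b = LUMA.b * inv;
  return [
    r + s, g,     b,     0, 0,
    r,     g + s, b,     0, 0,
    r,     g,     b + s, 0, 0,
    0,     0,     0,     1, 0,
  ];
}

// Applies the matrix to one RGBA color with channels in the 0..1 range,
// which is handy for checking a preset before using it on a live feed.
function applyColorMatrix(m, [r, g, b, a]) {
  const row = (i) => m[i] * r + m[i + 1] * g + m[i + 2] * b + m[i + 3] * a + m[i + 4];
  return [row(0), row(5), row(10), row(15)];
}

// Usage: pure red through the grayscale preset.
const grayscaleMatrix = saturationMatrix(0);
const grayFromRed = applyColorMatrix(grayscaleMatrix, [1, 0, 0, 1]);
```

The same 20-number array can describe sepia, tint, or contrast presets, so a small library of matrices covers most "basic visual overlay" needs without any face tracking.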

MLKit, TorchScript + Custom Bridges

Some teams integrate Google MLKit or TorchScript models for face detection or segmentation. These are paired with custom rendering logic using OpenGL or Skia.

Pros:

- Flexible and powerful;

- Useful for specific detection tasks (e.g., emotion recognition).

Cons:

- High development overhead;

- Native bridging and sync issues;

- Limited real-time performance without GPU optimization.

Best for: research or highly specialized filter implementations.

WebGL / Three.js (WebView-Based)

It’s technically possible to render filters in React Native using WebGL (via Three.js and a WebView), but it comes with heavy limitations:

- Poor camera access integration;

- Low frame rates;

- Incompatibility with native video capture;

- Inconsistent across devices.

Not recommended for production use.

Step-by-Step Guide: Adding Camera Filters with Banuba Face AR SDK

Let’s review how to set up camera filters in React Native using the example of Banuba’s Face AR SDK. Below is a step-by-step React Native image filter installation process.

Step 1: Request Access and Get a Trial Token

You can try Banuba’s Face AR SDK for free during a 14-day trial period. Just message us or get in touch via the contact form.

You will be able to access all the products: Face AR SDK, Video Editor SDK, and AR Cloud. AR Cloud stores your effects and lets users download them on demand, which keeps the app size down, since a single AR mask can take 2–3 MB of space.

Step 2: Choose Your Product

Both products you get — Face AR SDK and Video Editor SDK — offer React Native camera filters. Choosing the right one depends on when and how you want to apply visual effects:

- Face AR SDK is for real-time camera effects (masks, filters, makeup, avatars, AR-powered selfies, and so on). It is designed for social media, video calls, and live streaming;

- Video Editor SDK is for post-processing effects or timeline-based editing with filters applied to a video in a user's library or one being recorded.

You can use both if you require two sets of features, as the products are fully compatible.

Step 3: Install the SDK in Your React Native Project

A detailed setup guide is available on GitHub, and you can also study the dev documentation, but here’s the general outline:

a) For Android:

- Add Banuba’s Maven repository and SDK credentials to your build.gradle.

- Enable Camera, Internet, and Microphone permissions.

- Configure native modules in MainApplication.java.

[code]

// android/build.gradle
allprojects {
    repositories {
        maven { url 'https://repo.banuba.com/repository/maven-public' }
    }
}

[/code]

b) For iOS:

- Use CocoaPods to install the SDK via Podfile.

- Set up camera/mic permissions in Info.plist (e.g., NSCameraUsageDescription).

- Link native modules via a bridging header.

[code]

# ios/Podfile
pod 'BanubaFaceARSDK'

[/code]

Note: Expo is not supported. You must use React Native CLI with native modules enabled.

Step 4: Add Effects and Integrate

Once the SDK is installed and native modules are configured, the next step is to bring filters to life inside your React Native app.

Banuba uses a central class called BanubaSdkManager (Android) or BanubaSdk (iOS) that communicates with your JavaScript code through native bridges.

Ensure filters are in your project’s assets directory under effects/masks.

Example usage in JavaScript:

[code]javascript

import { NativeModules } from 'react-native';

const { BanubaSdkManager } = NativeModules;

// To apply a filter
BanubaSdkManager.loadEffect('effects/masks/Beauty');

// To remove a filter
BanubaSdkManager.unloadEffect();

[/code]
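The snippet above assumes the native module is always present. A minimal sketch of a defensive wrapper follows. `createEffectController` is a hypothetical helper, not part of the SDK, and the only native methods it relies on are the `loadEffect` and `unloadEffect` calls shown above. The module is passed in as a parameter so the helper can be exercised without a device.

```javascript
// Hypothetical helper: wraps the native module and fails with a readable
// message when the module was not linked, instead of "undefined is not a function".
function createEffectController(nativeModule) {
  if (!nativeModule || typeof nativeModule.loadEffect !== 'function') {
    throw new Error('Banuba native module is not linked; check the native setup steps.');
  }
  let current = null; // path of the effect that is currently applied

  return {
    apply(effectPath) {
      if (effectPath === current) return; // skip reloading the active effect
      nativeModule.loadEffect(effectPath);
      current = effectPath;
    },
    clear() {
      if (current === null) return; // nothing to unload
      nativeModule.unloadEffect();
      current = null;
    },
    get current() {
      return current;
    },
  };
}

// In the app (assumption: the module name matches the example above):
// const effects = createEffectController(NativeModules.BanubaSdkManager);
// effects.apply('effects/masks/Beauty');
```

Keeping the active effect path in one place also gives the UI a single source of truth for highlighting the selected filter.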

Step 5: Permissions and Compliance

- On iOS: add NSCameraUsageDescription and NSMicrophoneUsageDescription to Info.plist.

- On Android: request CAMERA and RECORD_AUDIO at runtime.

Example (Android permissions in JS):

[code]javascript

import { PermissionsAndroid } from 'react-native';

async function requestPermissions() {
  await PermissionsAndroid.requestMultiple([
    PermissionsAndroid.PERMISSIONS.CAMERA,
    PermissionsAndroid.PERMISSIONS.RECORD_AUDIO,
  ]);
}

[/code]
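The example above requests the permissions but ignores the answer. Since `requestMultiple` resolves with a map from each permission to its result, a small check like the sketch below can decide whether to open the camera. `ensureCameraAndMic` is a hypothetical name, and the API object is passed in as a parameter (in the app it would be React Native's `PermissionsAndroid`) so the logic can be tested off-device.

```javascript
// `permissionsApi` is expected to have the same shape as React Native's PermissionsAndroid.
async function ensureCameraAndMic(permissionsApi) {
  const wanted = [
    permissionsApi.PERMISSIONS.CAMERA,
    permissionsApi.PERMISSIONS.RECORD_AUDIO,
  ];
  // Resolves with an object like { [permission]: 'granted' | 'denied' | ... }.
  const results = await permissionsApi.requestMultiple(wanted);
  const denied = wanted.filter(
    (permission) => results[permission] !== permissionsApi.RESULTS.GRANTED
  );
  return { granted: denied.length === 0, denied };
}

// In the app:
// const { granted, denied } = await ensureCameraAndMic(PermissionsAndroid);
// if (!granted) { /* explain why the camera is needed, listing `denied` */ }
```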

For GDPR compliance, Banuba’s SDK processes all data on-device. No personal data is sent to external servers.

Key Takeaways

- Banuba’s Face AR SDK enables high-quality filters in React Native with real-time rendering and face tracking;

- Setup requires native configuration for both iOS and Android;

- Filters are loaded and controlled via JavaScript modules, while rendering is handled natively for smooth performance.

Performance Optimization and Best Practices

Real-time camera filters can be CPU- and GPU-intensive even on high-end devices, let alone low-end ones. We've gathered best practices for rendering, asset management, and native integration to ensure smooth performance across your React Native app.

Use Native Frame Processors (JSI/TurboModules)

Banuba’s SDK is built with JSI (JavaScript Interface) and native modules, bypassing the traditional React Native bridge. This ensures:

- Lower latency between JS and native;

- Real-time video feed processing at 30–60 FPS;

- Smooth UI interactions even when filters are active.

Avoid using third-party camera modules that rely on the React Native bridge. They introduce lag and sync issues.

Adapt Camera Resolution Based on Device

Lower-end devices may struggle with full HD resolution. Consider:

- Reducing preview resolution (e.g., from 1080p to 720p);

- Offering adaptive quality settings based on device benchmarks.

Banuba internally manages this through its optimized pipeline, but you can also expose settings to users for manual control.
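If you do expose quality settings yourself, the selection logic can be as simple as the sketch below. The memory thresholds and the `pickPreviewResolution` name are assumptions for illustration only; real tiers should come from your own device benchmarks, and how you obtain the device's total memory (for example, from a device-info library) is up to you.

```javascript
// Hypothetical tiers, ordered from most to least capable.
const PREVIEW_TIERS = [
  { minMemoryMb: 6144, width: 1920, height: 1080 },
  { minMemoryMb: 3072, width: 1280, height: 720 },
  { minMemoryMb: 0, width: 640, height: 480 },
];

// Picks a preview size from the device's total memory.
// A manual user setting, when present, always wins.
function pickPreviewResolution(totalMemoryMb, userOverride = null) {
  if (userOverride) return userOverride;
  const lowestTier = PREVIEW_TIERS[PREVIEW_TIERS.length - 1];
  // Fall back to the lowest tier if the memory value is missing or invalid.
  const tier = PREVIEW_TIERS.find((t) => totalMemoryMb >= t.minMemoryMb) || lowestTier;
  return { width: tier.width, height: tier.height };
}

// Usage
const midRange = pickPreviewResolution(4096);
const forcedLow = pickPreviewResolution(8192, { width: 640, height: 480 });
```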

Preload and Cache Filter Assets

Preloading filters during app launch or screen transitions prevents in-session lag when switching effects. Store frequently used filters locally and cache new ones in a background thread or when the app is inactive.
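One way to structure this is a small promise-based cache, sketched below. `createEffectCache` is a hypothetical helper, and `fetchEffect` stands in for whatever asynchronous function downloads or unpacks an effect in your app and resolves with its local path.

```javascript
// `fetchEffect(name)` is an assumed async function supplied by the app.
function createEffectCache(fetchEffect) {
  const pending = new Map(); // effect name -> Promise resolving to the local path

  // Returns the cached promise, starting the fetch only on first request.
  function get(name) {
    if (!pending.has(name)) {
      const request = Promise.resolve()
        .then(() => fetchEffect(name))
        .catch((err) => {
          pending.delete(name); // allow a retry after a failed download
          throw err;
        });
      pending.set(name, request);
    }
    return pending.get(name);
  }

  // Warms the cache for several effects; individual failures do not reject.
  function preload(names) {
    return Promise.allSettled(names.map((name) => get(name)));
  }

  return { get, preload };
}

// In the app (effect names are examples):
// const cache = createEffectCache(downloadEffect);
// cache.preload(['Beauty', 'Glasses']); // e.g., while the camera screen is opening
// const localPath = await cache.get('Beauty'); // resolves immediately if preloaded
```

Caching the promise rather than the result means that two screens asking for the same effect at the same time share a single download.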

Use Offscreen Rendering Pipelines

If building custom effects or overlays, render them offscreen and composite them to the camera feed. This avoids blocking the main UI thread.

Banuba handles this internally, but if combining third-party renderers (e.g., Skia or Lottie), ensure they run off the main thread.

Test Across a Range of Devices

What runs fine on a Pixel 7 might stutter on a Moto G. Build a performance matrix across:

- iOS vs Android;

- High-end vs low-end;

- Portrait vs landscape mode;

- With and without other effects or UI animations.

Use Banuba’s built-in analytics or performance logging to identify bottlenecks.
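If you add your own performance logging to fill in that matrix, a per-run summary can be computed from frame timestamps, as in the sketch below. `frameStats` is a hypothetical helper, and how the timestamps are collected (for example, from a render callback) is left to your integration.

```javascript
// Summarizes frame pacing from a list of frame timestamps in milliseconds.
// A frame counts as "slow" when it took longer than the budget for targetFps.
function frameStats(timestampsMs, targetFps = 30) {
  if (timestampsMs.length < 2) return { avgFps: 0, slowFrames: 0 };
  const budgetMs = 1000 / targetFps; // about 33.3 ms at 30 FPS, 16.7 ms at 60 FPS
  let slowFrames = 0;
  for (let i = 1; i < timestampsMs.length; i++) {
    if (timestampsMs[i] - timestampsMs[i - 1] > budgetMs) slowFrames++;
  }
  const elapsedMs = timestampsMs[timestampsMs.length - 1] - timestampsMs[0];
  const frameCount = timestampsMs.length - 1;
  const avgFps = elapsedMs > 0 ? (frameCount * 1000) / elapsedMs : 0;
  return { avgFps, slowFrames };
}

// Usage: four frames over 160 ms, one of which stalled for 100 ms.
const sampleStats = frameStats([0, 20, 40, 60, 160], 30);
```

Tracking slow frames alongside the average matters because a run can average an acceptable frame rate while still showing visible stutter.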

Key Takeaways

- Use JSI-based native modules like Banuba’s for low-latency performance;

- Preload and cache filters to prevent UI jank during transitions;

- Optimize resolution based on device class — balance quality and speed;

- Avoid blocking the main thread with additional renderers or heavy logic;

- Test on multiple devices and in real-world conditions to ensure consistent UX.

Common Challenges and Solutions

Below are the most frequently encountered problems and how to fix them fast.

Android/iOS Build Errors

Symptoms:

- Gradle or CocoaPods fail during build;

- App crashes on startup;

- undefined is not a function errors from native modules.

Solutions:

- Ensure all native dependencies are linked properly;

- Run pod install for iOS inside the ios/ folder;

- Check that the minimum Android SDK version is 21+;

- Clean builds:

[code]bash

# Run both commands from the project root
(cd android && ./gradlew clean)
(cd ios && xcodebuild clean)

[/code]

Filters Not Displaying or Applying

Symptoms:

- The camera opens, but filters don’t appear;

- Only a black screen shows;

- Filters load but don’t respond to face movement.

Solutions:

- Verify the correct path to your filter assets (e.g., 'effects/masks/Beauty');

- Ensure camera and mic permissions are granted at runtime;

- Test with a known working filter from Banuba’s SDK package;

- Confirm the device has a working camera and isn’t using a virtual webcam/emulator.

Video or Photo Capture Not Working

Symptoms:

- Filters work live, but captured videos/photos are blank or misaligned;

- App crashes on startRecording() or takePhoto().

Solutions:

- Use Banuba’s built-in capture methods:

[code]javascript

BanubaSdkManager.takePhoto();

BanubaSdkManager.startVideoRecording();

[/code]

- Ensure correct permissions: RECORD_AUDIO, plus WRITE_EXTERNAL_STORAGE on older Android versions that still require it;

- Check that the output path and format are correctly set on Android.

SDK Version Conflicts or Updates Break Integration

Symptoms:

- SDK stops working after React Native upgrade;

- Deprecated method warnings.

Solutions:

- Use Banuba’s latest version from GitHub;

- Match React Native and SDK versions noted in Banuba’s changelog;

- After updating:

- Re-link native modules;

- Clean build folders;

- Reinstall pods and Android dependencies.

Expo Not Supported

Symptoms:

- SDK install fails or throws native module errors;

- App compiles, but filters never appear.

Solutions:

- Banuba’s Face AR SDK requires native module support, which Expo Go does not provide;

- Use React Native CLI, or eject from Expo (not recommended unless necessary).

Key Takeaways

- Most integration issues come from native misconfiguration or permission handling;

- Always test using known working filters before debugging custom ones;

- Use Banuba’s provided methods for capture and rendering to avoid compatibility issues;

- Expo is not supported; use React Native CLI for full compatibility.

Why Banuba Face AR SDK is the Top Choice

Banuba’s Face AR SDK offers a complete, production-ready toolkit for building high-performance AR filters in React Native, combining advanced tracking, native optimization, and a smooth developer experience.

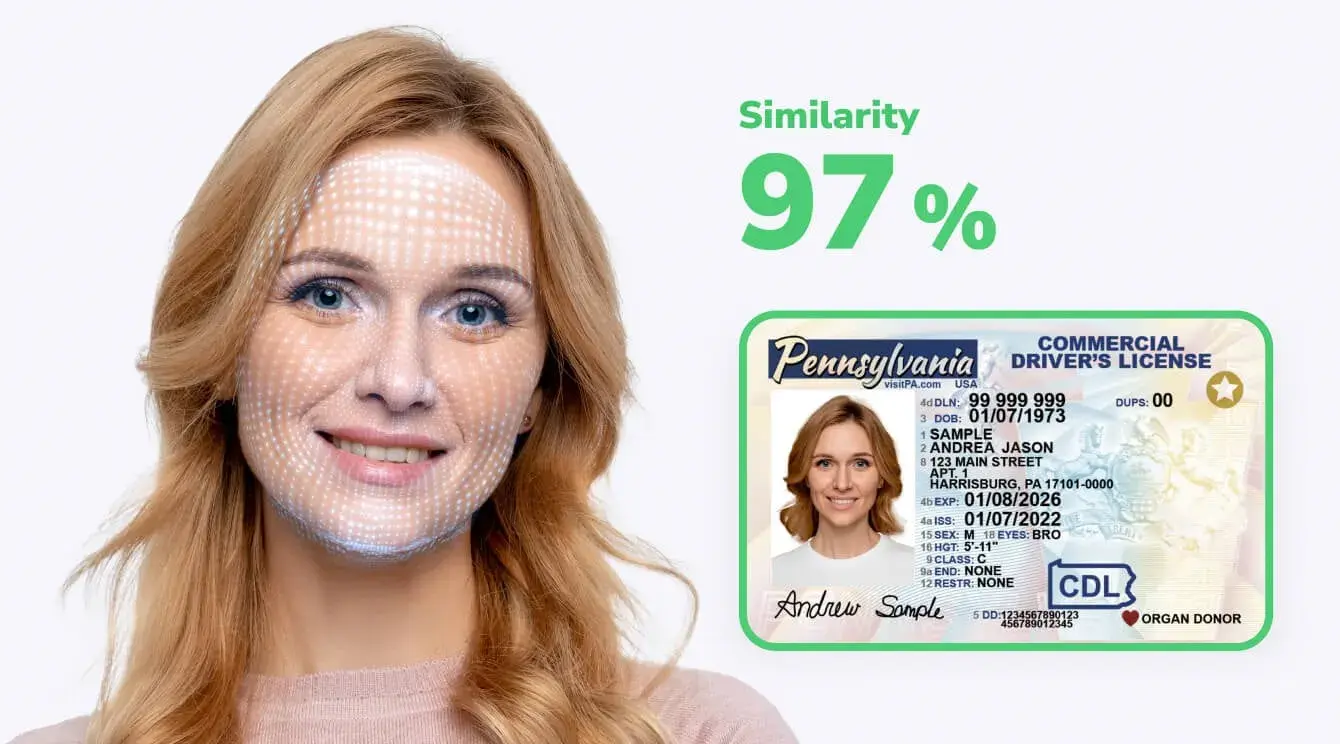

What distinguishes Banuba’s Face AR SDK among competitors?

- Patented face tracking with 3,308 facial points;

- Supports 360° rotation, multi-face detection, and low-light environments;

- Stable tracking even with 70% facial occlusion;

- On-device processing ensures fast response, privacy compliance, and low power consumption;

- Official React Native bridge using JSI/TurboModules;

- Clean API for loading, switching, and customizing effects;

- Cross-platform consistency (iOS + Android);

- Prebuilt effects and LUTs make it easy to get started with room to scale;

- Banuba Studio for previewing and exporting filters;

- 22,000+ digitized effects ready to integrate;

- Optional Admin Panel for remote updates and analytics.

“We know what it’s like to fight the bridge, chase FPS drops, and debug filters for three platforms. That’s why our Face AR SDK gives React Native developers stable AR tools that scale — and stay out of the way.” — Artem Harytonau, CTO at Banuba

Key Takeaways

- Banuba offers a proven SDK with high-accuracy tracking and robust AR rendering;

- It’s React Native–ready, with full cross-platform support and optimized performance;

- Built-in tools and effects reduce dev time, making it ideal for apps that scale.
